Numb Res by CNCD & Fairlight

pouet exe version video video (anaglyph 3d) youtube vimeo

Begin

It was easter. We made a new demo for The Gathering 2011. Yeah, that’s right – in Norway, not in Germany. I really wanted to do a new demo because I’ve been collecting new routines all winter, and it was high time they got into the wild. So about 3 weeks before easter Jani and I started bouncing ideas around (“something with fluids” was the sum total of that I think). Then we went on the hunt for music. As some may know, we don’t have an active musician we work with regularly in Fairlight or CNCD anymore; we have to outsource. So I dropped a message on facebook half-jokingly asking if anyone had a spare soundtrack. I’m not sure whether that was a good idea or not but I spoke to Ruairi (RC55), who put me in touch with Tom Wright (aka Stereo Wildlife). He’s produced a beautiful new album and agreed to let us use one of the tracks – and even did a bit of remixing to make it fit the demo. So, music was ready from day 1. This is such a huge bonus when making a demo; it meant we could completely design around it, plan out what scenes we wanted straight away and know they’d fit.

The demo was envisaged as a “small project” – a relatively low budget production. Low budget meaning less development time, fewer resources. Weeks to make by a small team. Frameranger for example is a very “high budget” demo – lots of people, over a year in the making, tonnes of art assets and specifically made effects, and lots and lots of wasted work. This one is very different; there’s only one hand-modelled mesh in the whole thing that’s “rendered” properly (the head at the start and end), although there’s lots of meshes used for other things in the demo. We wanted an effect-led production. The first thing that happened was that Jani designed the numbers scene in Lightwave: creating meshes for each number, placing them in the scene, timing them and making a camera path for the whole lot. Meanwhile I was working on effect development. Then Jani developed the introduction part with the head more or less on his own, and modelled and tweaked the tracks for the fluid parts while I worked on fleshing out the numbers scene with elements and effects. Then we integrated and worked together to finish. With a week or so to go there was a touch of panic and it looked like we weren’t going to get there; but in the end we found ourselves more or less done 5 days before the competition. For once we had time to polish, tweak and optimise. Hope it shows..

As an aside: the Gathering was a great event for us not least because they also held the Scene.org Awards, which recognise the best demoscene productions from last year. We got 11 nominations and after a very rock & roll ceremony full of glitz and fireworks came away with 4 awards: Ceasefire for best music, and Agenda Circling Forth for best effects, technical achievement and the cherry on the cake: best demo of 2010. Ooooh. Apparently we just missed out on Public’s Choice by a few points – but hey, no accounting for taste.. 😉

32. Particles. Again?

I’ve realised over time that I’m not really a traditional “democoder”. I’m a graphics researcher who happens to prefer to show his new work off in whatever demo we make next. That probably goes some way to explaining why I do things the way I do: researching and improving on certain areas (like particle systems or fluid dynamics. but not ribbons. bitches.). Some would say that fluids or particles are effects: you “do” fluids for a scene in a demo, then you go “do” something completely different. I don’t subscribe to that. For me the achievement in a demo like this is not to implement fluids: we first used fluid dynamics in a demo 5 years ago. The challenge is to move the field on – to do something new with it that nobody else has managed to do in realtime yet, or not on the same scale. Of course there’s a point where this gets lost on the viewer, and maybe it does just become “nice particles” to the uninitiated.

Although the natural reaction of some people will be “oh, particles again – nothing new!” – this is probably the biggest technical leap we’ve made for a demo since Blunderbuss. Instead of concentrating on the amount of particles and simply using them to render 3D scenes with a few modifiers on top, we concentrated on the cleverness of the particles: the simulation itself and the rendering/shading. In this demo the particles are smart. They’re going somewhere.

Particles are just a primitive like polygons or lines – not interesting in themselves. Creating and rendering a lot of them is easy. Making them do something interesting and look good is a completely different kettle of fish.

So let’s talk about what we did this time to make particles do something interesting and look good..

93. Smoothed Particle Hydrodynamics (SPH)

SPH is a form of fluid dynamics which uses particles for storing the fluid and the transport of the forces/densities, rather than a grid. This has several advantages: it lets you represent more detail at higher resolution than a grid would given the same memory / performance limitations, it’s not limited to a certain area of space, it makes collisions more practical, and it’s a better fit for liquid effects. It’s the scheme used in professional offline packages like Realflow, used for all those nice liquid splashy effects you see in ads and movies – which take hours to simulate, let alone render. Good SPH is for me one of those holy grails of effects development (like realtime radiosity). The thing is, the quality and scope of effects you can do with it is directly dependent on the number of particles – and so is the difficulty in pulling it off. If you have a few thousand you can make some droplet effects; with 10s of thousands you can make some nice splashes; and with 100s of thousands or millions, you can start to make really amazing running water simulations.

The problem with SPH in realtime is it’s really really hard. The simple explanation of the algorithm is: “take all the particles near my particle and perform some force exchange between them”. The force exchange is easy; the “all the particles near my particle” is a bitch. On GPU it’s even more of a bitch; and in 3D it becomes an order of magnitude more of a bitch.

Other demos have featured SPH before; FR-063 performed it on the CPU with (what looks like) between 1000 and 10000 particles. The current bleeding edge for 3D SPH in realtime is around 250,000 particles, working on a top end GPU using CUDA and with simple point rendering (and no effects or anything else on top). The current bleeding edge for 3D SPH on DX9 – i.e. with no compute shader / CUDA – is erm.. I don’t actually think it’s been done.

The problem is simply the neighbourhood search. You end up with a variable amount of fast-moving particles affecting each particle, where it’s hard to pick an upper bound – so the spatial database is hard to construct. If you solve the neighbourhood search, you can solve SPH.
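To make the neighbourhood search concrete, here is a minimal CPU sketch of the textbook way to do it: bucket particles into a uniform grid whose cell size equals the kernel radius, then only look at the 27 surrounding cells. This is an illustration only and not the approach used in the demo. The function names, the `poly6` density kernel and the unit particle mass are all assumptions made for the example.

```python
import math
from collections import defaultdict
from itertools import product


def cell_of(p, h):
    """Integer grid cell containing point p; cell size equals kernel radius h."""
    return tuple(int(math.floor(c / h)) for c in p)


def build_grid(positions, h):
    """Bucket particle indices by grid cell (the 'spatial database')."""
    grid = defaultdict(list)
    for i, p in enumerate(positions):
        grid[cell_of(p, h)].append(i)
    return grid


def neighbours(i, positions, grid, h):
    """Indices of all particles within distance h of particle i (including i)."""
    p = positions[i]
    cell = cell_of(p, h)
    found = []
    for offset in product((-1, 0, 1), repeat=3):  # 27 surrounding cells
        key = tuple(c + o for c, o in zip(cell, offset))
        for j in grid.get(key, ()):
            if math.dist(p, positions[j]) <= h:
                found.append(j)
    return found


def poly6(r, h):
    """Standard poly6 smoothing kernel; zero outside radius h."""
    if r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3


def densities(positions, h, mass=1.0):
    """SPH density per particle: kernel-weighted sum over its neighbours."""
    grid = build_grid(positions, h)
    return [
        sum(mass * poly6(math.dist(p, positions[j]), h)
            for j in neighbours(i, positions, grid, h))
        for i, p in enumerate(positions)
    ]
```

The awkward part on a GPU is exactly the `grid[...]` list: each cell holds a variable number of particles, which is easy in a Python dictionary and hard in a fixed-size texture.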

The demo features up to 500,000 particles running under 3D SPH in realtime on the GPU, with surface tension and viscosity terms; this is in combination with collisions, meshing, high end effects like MLAA and depth of field, and plenty of lighting effects. On DirectX9. It’s fast. Almost impossibly fast. How? We found a new approach to SPH where we can re-form the neighbourhood search term to something much easier to solve on a GPU. Meaning we can, honestly, get very close to what a program like Realflow can do over hours of simulation – but in realtime. And that, for me, is what demo coding (and realtime graphics) is all about.

There are 4 scenes which directly show “fluids” in the demo; a couple more use SPH in places for one great quality it has: it makes the particles spread out really nicely rather than bunch together randomly. In each of the fluid scenes it’s basically a load of particles dropped at the top of a very long track, and left to get on with it. The camera captures only a part of the action at any time – the great battle of “design vs showing off code” resulted in something that probably doesn’t completely sell the effect, but it does make something more enjoyable to watch. And that too is what democoding is about..

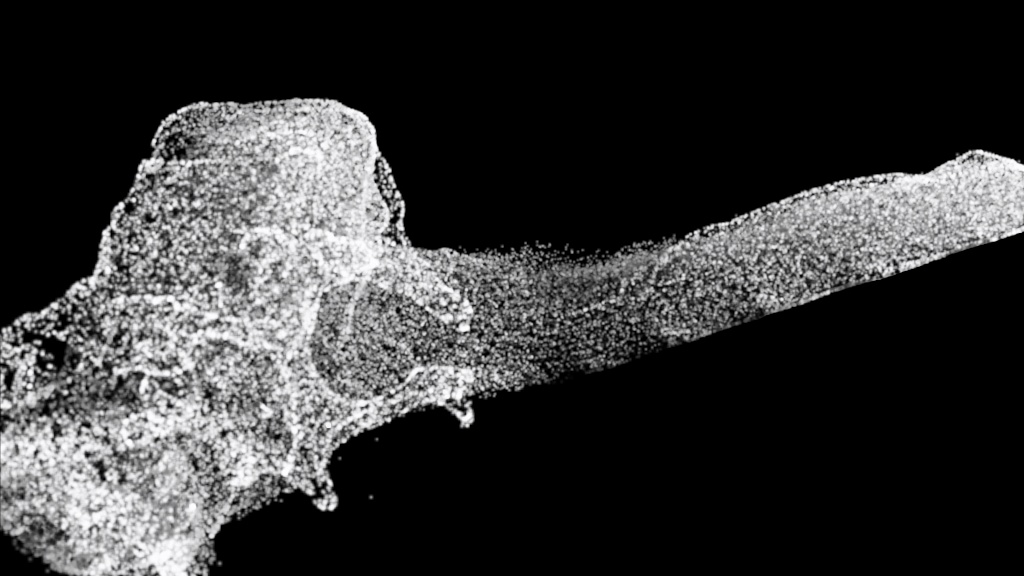

I thought it’d be nice to show it in isolation, so I put a couple of screenshots and a video above. Aside from that one embedded video – apparently wordpress is a little bitch and won’t let me embed more than one video link into a blog post – you can also check the reverse angles here and here. Those and the above screenshots show an initial test shot we did with 3D SPH – we drop 250,000 particles, and let them run with SPH and collisions against a mesh (handled as a signed distance field). Look, it splashes about and shit like that. All completely in realtime. Oooooooh. If nothing else, being able to run it in realtime makes it a lot easier to tweak. You get instant results – you don’t have to wait for any simulations to calculate. In these days of youtube and the prevalence of netbooks, perhaps high end realtime graphics doesn’t have the same relevance to the audience that it did 15 years ago – but it sure matters a huge amount when you’re actually making something. The benefit to the workflow is huge.

12. Signed Distance Fields

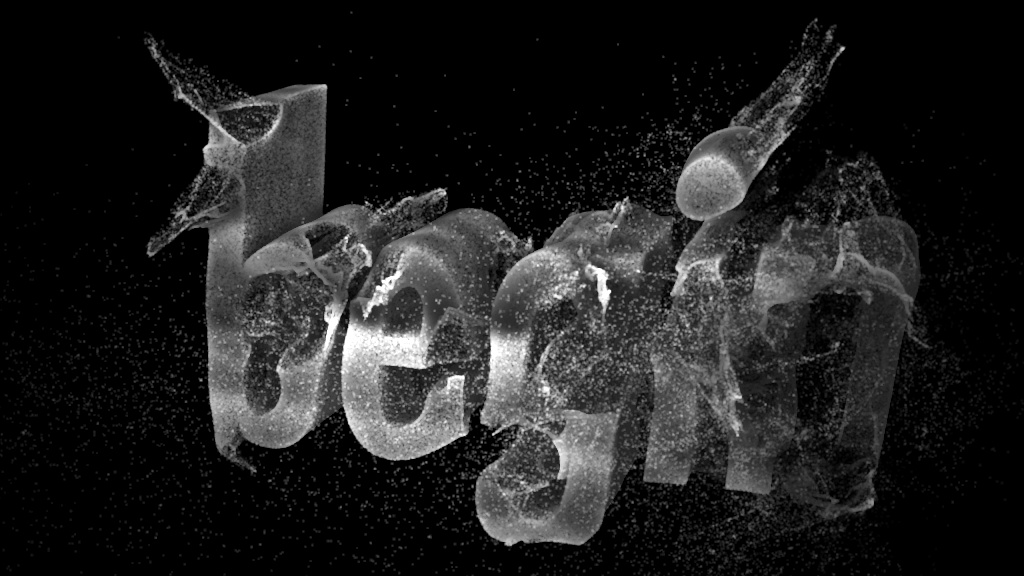

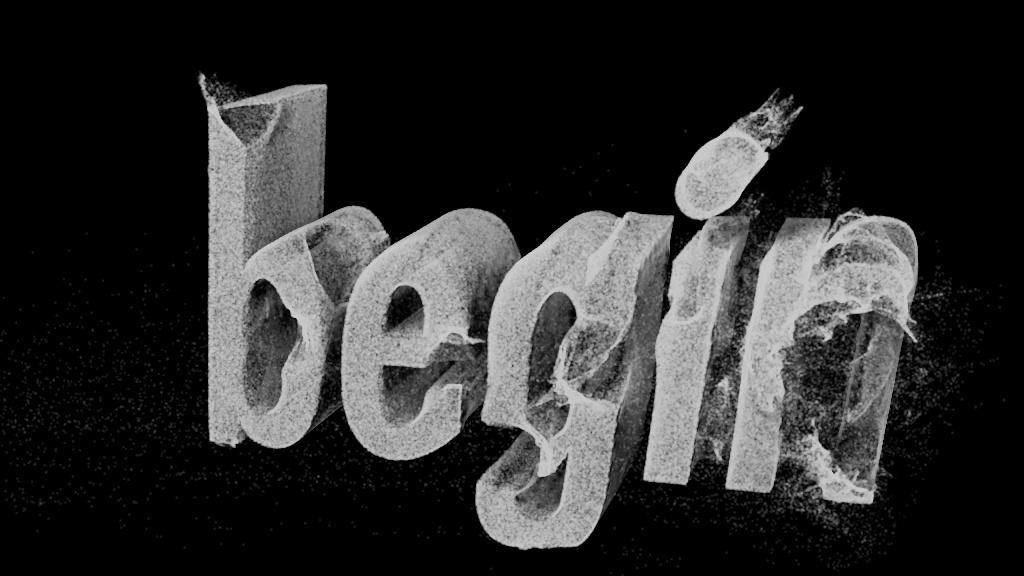

I touched on this for Ceasefire, but it was this production where we finally got them working and used them in anger: the use of signed distance fields for arbitrary collisions (and attraction) with particles. We take polygon meshes, convert them into signed distance fields using distance to triangle measurements and place the results in a volume texture, giving us the means for fast collision ray tests. This is absolutely invaluable when using fluid dynamics because otherwise the particles fly off merrily into space. So we have particles flowing around a head; particles flowing down a track carried by SPH; and particles being blown by a 3d fluid effect into the form of a word. All using signed distance fields.
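As a rough illustration of how a distance volume can drive collisions, the sketch below bakes a signed distance function into a small 3D grid, samples it with trilinear interpolation, and pushes a penetrating particle back to the surface along the field gradient. It is a CPU sketch under stated assumptions: an analytic sphere stands in for the distance-to-triangle bake described above, and all names (`bake_sdf`, `sample`, `collide`, the `bounce` factor) are hypothetical.

```python
import math


def bake_sdf(distance_fn, size, lo, hi):
    """Sample distance_fn at voxel centres of a size^3 grid over [lo, hi]^3."""
    step = (hi - lo) / size
    return [[[distance_fn((lo + (x + 0.5) * step,
                           lo + (y + 0.5) * step,
                           lo + (z + 0.5) * step))
              for x in range(size)] for y in range(size)] for z in range(size)]


def sample(vol, p, lo, hi):
    """Trilinearly interpolated distance at point p (clamped to the volume)."""
    size = len(vol)
    step = (hi - lo) / size
    g = [min(max((c - lo) / step - 0.5, 0.0), size - 1.000001) for c in p]
    i = [int(c) for c in g]
    f = [c - k for c, k in zip(g, i)]

    def lerp(a, b, t):
        return a + (b - a) * t

    def row(dy, dz):  # interpolate along x within one row of voxels
        r = vol[i[2] + dz][i[1] + dy]
        return lerp(r[i[0]], r[i[0] + 1], f[0])

    return lerp(lerp(row(0, 0), row(1, 0), f[1]),
                lerp(row(0, 1), row(1, 1), f[1]), f[2])


def gradient(vol, p, lo, hi, eps):
    """Normalised central-difference gradient; points away from the surface."""
    g = []
    for axis in range(3):
        a, b = list(p), list(p)
        a[axis] += eps
        b[axis] -= eps
        g.append(sample(vol, a, lo, hi) - sample(vol, b, lo, hi))
    length = math.sqrt(sum(c * c for c in g)) or 1.0
    return [c / length for c in g]


def collide(vol, pos, vel, lo, hi, bounce=0.5):
    """If pos is inside the surface, project it out and reflect the velocity."""
    d = sample(vol, pos, lo, hi)
    if d >= 0.0:
        return list(pos), list(vel)
    n = gradient(vol, pos, lo, hi, (hi - lo) / len(vol))
    new_pos = [c - d * k for c, k in zip(pos, n)]  # d < 0, so this moves along +n
    vn = sum(v * k for v, k in zip(vel, n))
    new_vel = list(vel)
    if vn < 0.0:  # only reflect if moving into the surface
        new_vel = [v - (1.0 + bounce) * vn * k for v, k in zip(vel, n)]
    return new_pos, new_vel
```

One lookup gives both "am I inside?" (the sign) and "how far to the surface?" (the magnitude), which is why a single volume texture read per particle is enough for this kind of test.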

We used them for a lot more besides particle effects, though. They’ve become an integral part of our rendering pipeline. That will become more apparent the next time we do something featuring a lot of solid 3D.. but they’ve opened up a lot of doors.

One clear example of SDF usage comes in the first “fluid” scene – falling drops collide with invisible words. This also neatly demonstrates the “art vs code” issue – we’re simulating 250,000 particles under SPH running down a long 3D track, and the camera shows a small subsection of those. The collision with the words actually uses two affectors: we used a collision node to make the particles bounce off the 3D words (using an SDF version of the mesh), which worked great – but it means you only see the top of the words. 🙂 So we added a second affector – a low weighted mesh attractor which pulls the particles towards points on the faces of the mesh. This helped the particles slowly run down and also pulls them in from 3d space towards the words. It also added to the surface tension effect by keeping them attracted to the words even after they fall off the end.

65. Particle Shading

In my original post on my particle system a year or more ago I talked about how we had support for opacity shadow maps for self shadowing on particles. Since Blunderbuss we didn’t actually use that much – we’ve mainly got away with unlit particles, using the shading and lighting from the source meshes. But I’ve been working on some new techniques and had to make use of them..

The major problem with opacity shadow maps is depth aliasing – you only have a limited set of depth samples (16 in my case) for which to represent the scene, and it’s not enough. They tend not to be spread evenly across the particles either. So I tried a few new methods:

252. Volume Shading

This method borrows heavily from slice-wise volume rendering: the particles are sorted in light space by depth, nearest to furthest, and rendered in slices to composite the image. In this case though we only care about the shadow result: the values are written into the per-particle shading buffer used in the final particle render.

The sorted particles are rendered into the shadow map in batches – typically we used 64 batches per particle system. Per batch we additively render the batch particles into the shadow map, then project the shadow map onto the particles in the next batch: the value read from the shadow map is considered the amount of shadow on that particle from particles closer to the light.

The clever bit is, this method doesn’t care about the actual depth of the particle: it only cares about the position of the particle in the sorted sequence. No depth writes are required and transparency is supported without any problems. One additional benefit of the technique is that we can blur the shadow map a bit after each batch, giving a scattering effect. If one had the power to do it and could render one particle per batch, it’d give a perfect shadowing result. As it is, the batch sizes give some slice aliasing.
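The batch loop can be sketched on the CPU as follows. This is a simplified illustration, assuming the light shines along +z, particle x/y lie in [0, 1), each particle covers exactly one shadow map texel, and every particle adds a fixed `opacity`; the function name and parameters are made up for the example, and the optional blur between batches is left out.

```python
def volume_shadows(particles, res=8, batches=4, opacity=0.25):
    """Per-particle shadow in [0, 1] from particles nearer the light.

    particles: list of (x, y, z), x and y in [0, 1), light travels along +z.
    """
    # Sort by depth in light space, nearest to the light first.
    order = sorted(range(len(particles)), key=lambda i: particles[i][2])
    shadow_map = [[0.0] * res for _ in range(res)]
    shadow = [0.0] * len(particles)
    batch_size = max(1, -(-len(order) // batches))  # ceiling division

    def texel(p):
        return min(int(p[0] * res), res - 1), min(int(p[1] * res), res - 1)

    for start in range(0, len(order), batch_size):
        batch = order[start:start + batch_size]
        # Project the map onto this batch: it holds everything nearer the light.
        for i in batch:
            x, y = texel(particles[i])
            shadow[i] = min(1.0, shadow_map[y][x])
        # Then additively render this batch into the map for the next one.
        for i in batch:
            x, y = texel(particles[i])
            shadow_map[y][x] += opacity
    return shadow
```

Particles inside the same batch never shadow each other, which is where the slice aliasing comes from: with one batch per particle the result is exact, with a single batch nothing is shadowed at all.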

Unfortunately the slice aliasing was too much of a problem with large systems and the technique is also a bit too slow – and generates a lot of render target swaps. So I came up with something better..

15. “Stochastic” Shadow Mapping

This isn’t the same as the stochastic shadow mapping paper that was recently presented, but the name makes a certain amount of sense for the effect anyway. 🙂 The basic idea is something I’ve tried a few times on and off since 2009. The idea is that if your particles don’t overlap pixels in view space, you could render them as solid – using regular shadowmapping and lighting techniques. Of course this is rarely the case in a render – because particle systems rely on lots of small elements overlapping and blending to look solid and nice. However, what if you do render them as single pixels and make them not overlap, and then perform a full screen 2D operation to upscale each point and make them overlap and blend?

We applied that approach to shadow maps generated from particles. The particles are rendered as single points to a very large shadow map; this gives us a reasonable chance that the particles won’t overlap. It’s just like a spatial hash – with a very simple hashing function and no collision handling.. Then, when sampling, we read from the map using a large kernel and sum up the amount of filled pixels which pass the shadow map test to give a shadowing result.

Stochastic shadowing in action, on something that is definitely not an artistic interpretation of a sperm cell.

But there’s a twist: in order to improve the quality, cope with hash collisions and reduce aliasing, we perform a temporal reprojection step. When writing the shadow map each frame a random sub-pixel offset is applied to each particle which varies every frame; this means we get a different set of collisions, so different particles become visible each frame. Then when sampling the shadow map we blend the result with the previous frame, so the results adjust smoothly over time. By combining these two things we get a very nice, soft, reasonably alias-free shadow solution which is also efficient to render. No sorting required. The final shadow value per particle is written into a buffer and used at particle render time.
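Putting those pieces together, a toy CPU version might look like the sketch below: single-texel point rendering with a per-frame random jitter, a kernel that counts filled texels nearer the light, and a blend with last frame's value. Everything here is an assumption made for illustration (the names, the `density` scale that turns a hit count into a shadow amount, the `blend` rate, light along +z with x/y in [0, 1)); the real thing runs in shaders.

```python
import random


def render_points(particles, res, rng):
    """One texel per particle with a random sub-texel jitter; nearest depth wins.

    Particles that land on an occupied texel are simply lost this frame,
    like a hash table with no collision handling.
    """
    depth = [[None] * res for _ in range(res)]
    for x, y, z in particles:
        tx = min(res - 1, max(0, int(x * res + rng.uniform(-0.5, 0.5))))
        ty = min(res - 1, max(0, int(y * res + rng.uniform(-0.5, 0.5))))
        if depth[ty][tx] is None or z < depth[ty][tx]:
            depth[ty][tx] = z
    return depth


def occluders_in_kernel(depth, p, radius, bias=1e-3):
    """Count filled texels around p that hold a particle nearer the light."""
    res = len(depth)
    cx, cy = int(p[0] * res), int(p[1] * res)
    hits = 0
    for ty in range(max(0, cy - radius), min(res, cy + radius + 1)):
        for tx in range(max(0, cx - radius), min(res, cx + radius + 1)):
            d = depth[ty][tx]
            if d is not None and d < p[2] - bias:
                hits += 1
    return hits


def update_shadows(particles, previous, res, radius, rng,
                   blend=0.2, density=0.1):
    """One frame: re-render the jittered map, sample it, blend with last frame."""
    depth = render_points(particles, res, rng)
    result = []
    for p, old in zip(particles, previous):
        now = min(1.0, occluders_in_kernel(depth, p, radius) * density)
        result.append(old + (now - old) * blend)  # temporal smoothing
    return result
```

Calling `update_shadows` once per frame with the previous frame's output lets the jitter expose a different subset of colliding particles each time, and the blend averages those subsets into a stable value.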

I also experimented with the technique for the actual rendering of the particles to the main frame – rendering single points with Z test and blurring the buffer out, with some per-pixel sorting during the composite, to create softened particles but without the need for a full particle sort. Unfortunately it didn’t give us the visual fidelity we needed; we relied on the blending of particles, the variable sizes and the sprites used. Could be more applicable in a future project though.

536. Meshing (Marching Cubes)

I suppose it’s the obvious step, isn’t it. Democoders love metaballs. Being able to render particles as meshes using metaballs is something we’ve wanted to do for ages because it moves us towards the “liquid” look – the Realflow-style look. We’ve been here before: in Frameranger we rendered around 50,000 metaballs in realtime by generating a potential field, converting it into a signed distance field and raymarching it. Results were promising but not perfect: being able to generate an actual triangle mesh has some side benefits, like being able to post process the mesh and adjust it with tension – something we really wanted to do to get closer to that Realflow look I keep going on about.

Marching cubes gives two issues to solve: generating the potentials, and then triangulating them. We already worked out how to generate the potentials some time ago for Frameranger, although a bit of work was required to scale it up to 250,000 particles. The second part is more difficult: you need to generate an arbitrary amount of geometry data from that potential field with triangle and vertex counts that change every frame. Naturally, we could quite easily make an implementation which just generates the worst case: treat every cell in the volume as if it was contributing triangles, then write degenerates for the invalid ones. That actually works – but it’s prohibitive for large volumes. One cell can contribute up to 5 triangles, and with a 128^3 volume we’d be looking at 10 million triangles – which isn’t great. 256^3 volumes would effectively be impossible. What we need is a way to only process and send triangles for the cells that are active.
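The worst-case figures are easy to check. The helper below is only a worked calculation under the same simplification the text makes (one cell per voxel, five triangles per cell); the function name is made up.

```python
def worst_case_triangles(resolution, max_per_cell=5):
    """Triangles emitted if every cell of a resolution^3 volume were active."""
    return resolution ** 3 * max_per_cell


print(worst_case_triangles(128))  # 10485760, the "10 million" above
print(worst_case_triangles(256))  # 83886080, eight times as many
```

Doubling the volume resolution multiplies the worst case by eight, while the number of cells that actually touch the surface grows far more slowly, which is why only processing active cells matters so much.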

This is problematic because we can’t generate index or vertex buffers on the GPU, we can’t generate drawcalls on the GPU (so we can’t vary how many primitives are rendered on the GPU) and we can’t use the CPU – because the potential field is on the GPU and it’d be far too slow to get it back to CPU. And even if we could, the CPU probably isn’t up to the task of generating the geometry fast enough anyway. And even if it was, we’d have to send all the triangle data back to the GPU again. So we’re stuck with the GPU – and yet we don’t have a way to vary the number of cells we render triangles for.

It seems impossible. However, Gernot Ziegler came up with a nice solution a while ago: histopyramids. This is a way of performing stream compaction on the GPU: it takes a big sparse buffer, and moves all the filled elements to the start of the buffer. A bit like a sort, but much more efficient. This gives us exactly what we need: we generate the (sparse) potential grid and use histopyramid compaction to move all the filled elements to the start. Then we use an occlusion query to count the number of active cells and use the CPU to generate batches which give enough triangles for the count to generate. The actual vertices are generated using a pixel shader and vertex texture fetch is used to read them.
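For readers who haven't met histopyramids, the idea fits in a few lines when reduced to one dimension: build a pyramid of partial sums over the active flags, then find the k-th active cell by walking down from the top. This is a CPU sketch of the general technique, not the demo's shader code; the GPU version works on textures with 2x2 reductions, and the function names here are invented for the example.

```python
def build_pyramid(active):
    """Levels from per-cell flags (base) up to a single total (top).

    The base is padded with zeros to a power-of-two length.
    """
    n = 1
    while n < len(active):
        n *= 2
    level = [1 if a else 0 for a in active] + [0] * (n - len(active))
    levels = [level]
    while len(level) > 1:
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def lookup(levels, k):
    """Base index of the k-th active cell (0-based), found top-down."""
    index = 0
    for level in reversed(levels[:-1]):
        index *= 2
        if k >= level[index]:      # k-th element lies in the right child
            k -= level[index]
            index += 1
    return index


def compact(active):
    """Indices of all active cells, packed to the front in order."""
    levels = build_pyramid(active)
    total = levels[-1][0]          # number of active cells
    return [lookup(levels, k) for k in range(total)]
```

Each output element is found independently with a fixed number of reads (one per pyramid level), so every output vertex or pixel can do its own lookup in parallel. In this sketch the active count is simply read from the top of the pyramid; the text above describes using an occlusion query for that.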

Result!

4. Bokeh Glows

I’ve had this effect on the back burner for a few years but finally got round to actually finishing it.. Bokeh is the term relating to the effect of circular or shaped highlights in a depth of field effect, caused by inaccuracies in the shape of the lens of a camera. Or something. They make DOF look really nice. I’ve tried this before by using a really big circular kernel for a regular DOF effect with an HDR input and leaving it at that, and it actually does work, but I wanted to see if I could get some shaped bokehs and really overblow it. So I tried something with point sprites.

The basic idea is to work out where on screen bokehs would happen, and render point sprites at those points. I did this using the following method:

– Bilinear downsample the screen (in several steps), storing the 2d position (UV) of the brightest point of the 4 values of the quad that were read to a render target.

– Use those 2d positions to read a blurred version of the original frame. Perform some thresholding to pick out the points which pass. Generate colour values for the points.

– Temporally smooth positions and colours using positions from last frame, apply some attack and decay.

– Render a load of point sprites using vertex texture fetch to read the positions and colours, rendering the sprites to the screen. (With some additional magic to make it look good.)
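The first three steps can be sketched on the CPU like this. It is a simplification under stated assumptions: the input is a 2D list of luminance values, the threshold is applied to the downsampled luminance directly rather than to a separately blurred frame, and the names and the `attack` / `decay` rates are invented for the example.

```python
def brightest_downsample(level):
    """Halve a grid of (luminance, (u, v)), keeping the brightest of each quad."""
    h, w = len(level) // 2, len(level[0]) // 2
    return [[max((level[2 * y + dy][2 * x + dx]
                  for dy in (0, 1) for dx in (0, 1)),
                 key=lambda e: e[0])
             for x in range(w)] for y in range(h)]


def find_bokeh_points(image, steps, threshold):
    """UV positions of the brightest point per coarse cell that pass threshold."""
    height, width = len(image), len(image[0])
    level = [[(image[y][x], ((x + 0.5) / width, (y + 0.5) / height))
              for x in range(width)] for y in range(height)]
    for _ in range(steps):
        level = brightest_downsample(level)
    return [uv for row in level for lum, uv in row if lum >= threshold]


def smooth(previous, target, attack=0.5, decay=0.1):
    """Temporal smoothing: rise quickly (attack), fall slowly (decay)."""
    rate = attack if target > previous else decay
    return previous + (target - previous) * rate
```

Because each coarse cell yields at most one candidate, the number of sprites is fixed by the downsampled resolution, which suits rendering a constant-size batch of point sprites that read their position and colour from a texture.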

72. Post Process Antialiasing (MLAA)

This is the first demo since 2009 (Frameranger, in fact) that we’ve released which actually features polygons being rendered as polygons. Happily, time has moved on, and so has our renderer. One of the major bugbears I had with the deferred renderer is lack of antialiasing – but fortunately a whole bunch of post process antialiasing techniques got invented in the last couple of years. MLAA is the technique du jour, and we use an implementation in our renderer. It’s great.

We do two little twists in our version to make it cool: firstly we use a lot of stencil optimisation so only the active edges get the big-ass shader applied to do the actual MLAA (or in fact get any of the process after the edge detect applied). And secondly.. there’s an ugly problem with MLAA in that it actually cocks up quite badly in a certain case. The technique relies on checking for horizontal or vertical edges. But where you have a pixel which is both a horizontal and vertical edge, it messes it up. Which breaks about 1/4 of the diagonal edges you have to deal with, so it’s pretty noticeable. Our oh so clever technique for fixing that is.. do the MLAA twice. 🙂 The second time we flip the whole image in x and y, then MLAA it and flip it back. Genius huh? .. no? Well, it makes the polygonal scenes look good, and fortunately the stenciled version is so fast the extra hit isn’t really noticeable.
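The flip trick itself is independent of how the antialiasing pass works, so it can be shown with any pass that has a directional bias. In the sketch below `toy_pass` is a deliberately lopsided stand-in (it only blends each pixel with its right-hand neighbour) and is in no way an MLAA implementation; `flip_xy` and `antialias_twice` are names made up for the example.

```python
def flip_xy(image):
    """Flip a 2D image in both x and y (a 180 degree rotation)."""
    return [row[::-1] for row in image[::-1]]


def antialias_twice(image, aa_pass):
    """Run aa_pass, then run it again on the flipped image and flip back."""
    first = aa_pass(image)
    return flip_xy(aa_pass(flip_xy(first)))


def toy_pass(image):
    """Stand-in filter with a directional bias: blends only towards the right."""
    return [[(row[x] + row[x + 1]) / 2 if x + 1 < len(row) else row[x]
             for x in range(len(row))] for row in image]


edge = [[0.0, 0.0, 1.0, 1.0]]
print(toy_pass(edge))                   # [[0.0, 0.5, 1.0, 1.0]]
print(antialias_twice(edge, toy_pass))  # [[0.0, 0.25, 0.75, 1.0]]
```

A single run of the toy pass only softens one side of the edge; the flipped second run reaches the side the first run could not, which is the same reasoning as running MLAA on the flipped image to catch the cases it misses the first time.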

42. Stereoscopic 3D

We really wanted to do something with 3D for a while, but sadly we don’t have any true 3D hardware (*cough* donations please *cough*). We decided quite early on that we were going to go for a pretty much black & white look – so it would actually be feasible to use the good old red / cyan anaglyph method. 3D isn’t as easy as just turning it on, though. It takes some effort to make it work well, give a good effect and not strain your eyes. We tuned it quite carefully and the setup of the scenes really helps – the first scene is slow and quite static so it lets your eyes adjust, the camera movements are quite smooth and in a single direction so they’re easy to track, and so on and so on.
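The compositing step of a red / cyan anaglyph is tiny, which is part of its charm. A minimal sketch, assuming the usual convention of the red filter over the left eye and images stored as rows of `(r, g, b)` tuples; the function names and the `separation` parameter are made up for the example:

```python
def anaglyph(left, right):
    """Red channel from the left-eye image, green and blue from the right."""
    return [[(l[0], r[1], r[2]) for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]


def eye_offsets(separation):
    """Horizontal camera offsets for the left and right eye renders."""
    return -separation / 2.0, separation / 2.0
```

This also shows why a near black & white look helps: a grey pixel has equal red, green and blue, so each eye sees its own image at full strength, whereas a saturated red or cyan object would all but vanish for one eye.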

Do watch the demo in 3D, it’s really made for it. We’re going to make a proper HD 3D video with left & right splits soon for those with real 3d setups.

End

I guess what’s interesting for me about this demo is that it was so much easier to make than many we’ve done. It just kind of came together; we started early enough, we got the music at the start, we didn’t have any major problems, nobody disappeared or dropped out, everything showed up on time, we didn’t completely overstretch ourselves and come up with some ideas that couldn’t be done, and we had time at the end to go over it and tweak and polish things, and we’re really happy with how it turned out. It’s like the way it’s supposed to go but never does. It doesn’t work for everyone (not very bombastic, you see) but it seems the people who got it really got it and like it, which is what matters. Maybe we’ve actually cracked it.. or maybe next time’ll be a royal screwup. Have to wait and see..

An amusing realisation hit me the other day. We’ve unintentionally managed to make a demo which is entirely full of sexual references. There’s a load of massive sperm cells; there’s what looks like a female gender symbol, made up of little sperm cells; there’s a load of sperm falling down and colliding off things; and then there’s a big river of .. well, it’s not much of a stretch in context to call that fluid “spunk”, is it? It only dawned on me after Dixan commented that it was “finally a good demo about semen” on pouet, and I started thinking about it.

Shit.