2008 rolled around, and it was time for us to get over the disappointment of Media Error’s poor public reception and try, once again, to win the Assembly demo competition – the thing that still eluded us after all these years. The major criticism of Media Error had been its flow – some people thought it was a series of unconnected scenes. To me the theme was obvious (obvious!!) – a TV flicking channels, and a thing about war – but apparently it was too vague for the average viewer, who needs to have his hand held from A to B. Apparently a demo only has “flow” nowadays if it either a) consists of one single scene, or b) is so covered in noise that it’s hard to tell what the viewer is seeing and therefore to say whether there is any flow or not. Making a demo with actual discrete scenes and a variety of content is apparently a complete no-no nowadays. But that’s another post entirely.

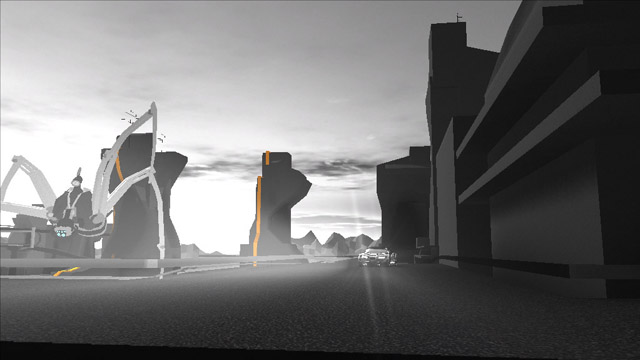

Anyway, we decided we did want to do something with a clearer storyline. The rough concept we settled upon was a “story” about a robot (car) coming to earth in a space ship, landing in an explosion of debris, driving to a city, battling a large spider robot and then transforming into humanoid form and finishing the job. Yes, it’s an 11-year-old comic book/sci-fi nerd’s wet dream. But seeing as we’re “cool”, the clever part is it was all to be done with style, clever art direction and graphic design. Think the art house version of Transformers.

The second thing we wanted was a “demo part” – something seemingly unconnected to the main story where we throw in a load of big heavy effects to say “hah, we’re better than you”, in classic demo tradition – a homage to the demos of the early 90s, where a “design part” / graphics-heavy story section would give way to a series of vector effects which showed off how massive the coder’s balls are. That’s another problem with the demo-watching audience these days – sadly the average technical knowledge has dropped off quite heavily, so often the audience doesn’t realise if there’s an “effect” or some clever code at work unless there’s a massive red arrow pointing to it saying “clever effect here”, or if the effects are presented in such a way that it’s bleeding obvious that there’s a big effect there. Well, we’re “cool” so we don’t do that usually – we like it when the effects are seamlessly mixed into graphics and design, so you can’t tell exactly what’s going on – but it does lead to people missing the clever stuff. We decided to rectify that with the “demo part”.

It became apparent this demo was a pretty tall order. The scenes were massive and numerous; the animation requirements far exceeded previous productions; and the story / direction meant that it was a lot harder to chop and change – in most demos if you lose a scene you can fill it with something else, but if there’s a story to follow and you lose a scene the story stops making sense.

Assembly 2008 came around. Unfortunately I was busy fixing two other entries – a 4k and a 64k (Panic Room, which won the 64k competition) – so I didn’t devote as much time to the demo as was needed. In the end we all ended up, as has become tradition, in Destop’s apartment on the Thursday night of Assembly. One small issue was that I had previously visited the party place on the way to the airport to drop off some friends, and I happened to stop by the Assembly sub-event “Boozembly”. Assembly is an event attended by kids of all ages, unfortunately including those under 18 – and the insurance and licensing difficulties mean that they have to make it completely dry – no alcohol is sold, or even permitted in the hall, something strictly enforced by the numerous security guards. In fact they even sometimes check your car for alcohol if you park in their car park. Fun and games ensue every year as people try to find ways to get alcohol inside – something I’ve managed just once, but that’s another story.

To make up for the lack of booze inside there is an unofficial sub-event that occurs on some rocks a few hundred metres away from the hall called “Boozembly”, which is usually a complete mess of drunks wandering around in the darkness falling over (and off) sharp rocks. I remember one year – I think it was 2003 – two members of Noice had ambulances called within hours of each other for different reasons. Anyway, I had visited the rocks while going to the party hall and had a few beers before heading to Destop’s – so by the time I got there it was very late, and I wasn’t going to be too productive. The next morning we decided to make the sensible decision and give up. For once we felt the content was too good to waste on a rushed demo.

We set numerous deadlines for release. The first one that went by was NVScene a few weeks after Assembly; later we tried for Breakpoint at Easter 2009. All came and went, and Assembly came around again. This time there was a sense we really had to make it. We cleared our schedules and dedicated some proper time for it. For the first time in years I wasn’t able to attend Assembly, so for once that weekend of crunch in Helsinki wasn’t going to happen – it had to get made properly on time. For once we actually planned it out. We had time – a couple of months. We had a lot of content and assets already done, a stable toolset, and we knew exactly what we needed to do.

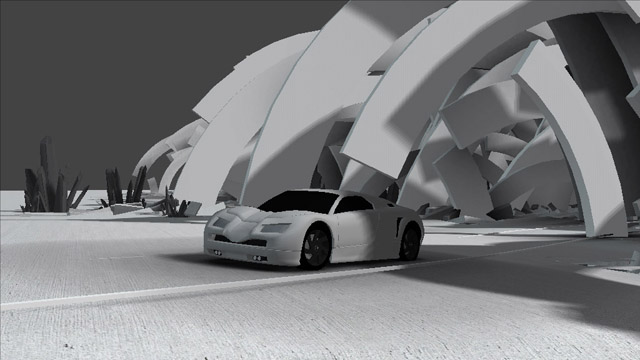

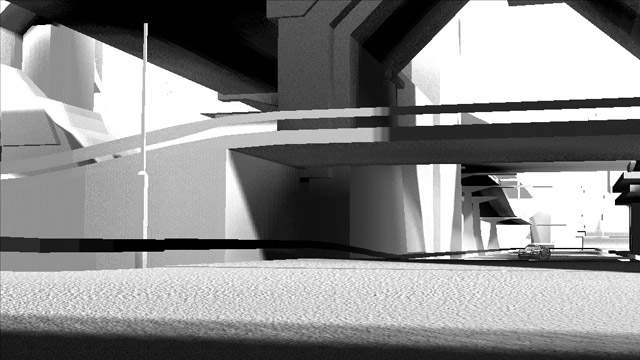

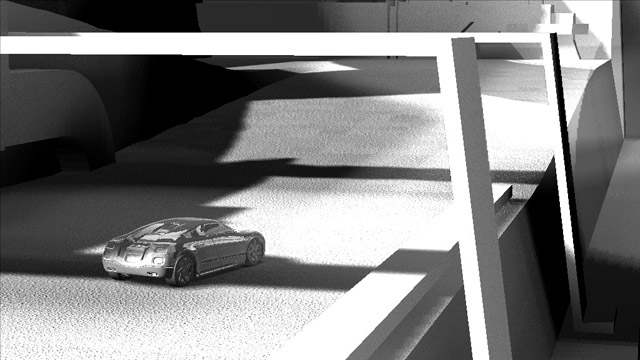

For this demo we tried a different approach to actually making it. The previous demos I’ve worked on usually followed a certain pattern. We made some graphics or effects; we set them up in the demo tool, and spent an age tweaking the lighting, shaders and layering on the post fx; and it remained like that until just before the deadline, when we quickly jammed in some cameras and animation in the scene and called it “done”. What that gave us was a load of pretty but boring scenes. Or worse, a lot of work was done on content that never got seen by the demo camera. This time we wanted it to be different. We decided to focus on the weakest thing in our demos – the “action” – and get that done first. Post fx, lighting and shading are important, but ultimately a distraction – you can spend hours tweaking colours. It’s the easiest form of procrastination. We went for an approach that some gamedev teams use and tried to build the demo “in grey” – almost unshaded, no post fx, simple lighting and placeholders where necessary, to get the cameras and action right first. I actually believe this did work, and it’s a good way to go – it made us concentrate on getting some nice motions and direction down early, and we knew where the camera was going to be before we set up the lights, post fx and shading.

The challenge was still immense. We hit a lot of problems – technical, artistic and logistical. The number and size of scenes meant that our previous approach of baking lightmaps for ambient occlusion / global illumination wasn’t feasible – it would send us way over the 64 MB limit – so I came up with a new lighting engine that ran fully deferred, and allowed us to use a number of techniques to approximate ambient occlusion in realtime. The old engine wasn’t set up for such a large amount of animation content and had to go through numerous optimisation passes just to make the thing load in a sensible amount of time. Some of the effects and the rendering engine were very heavy on render targets, so we had serious problems with lack of VRAM. We had major main memory problems too – we had never made such a large demo, and we found that we were hitting the 2 GB Win32 app limit in the demo tool, causing it to crash. I had to spend yet more time optimising the memory usage. I later discovered the major culprit was TinyXML – it ate 600 MB to load a 30 MB XML file (our demo “script” – which is generated and superbloated), and completely fragmented the memory with small allocations. My emergency fix was to optimise the XML file itself – yes, cutting down by hand the names of nodes and attributes – and I got it down by more than 50%, which got us out of trouble until I rewrote the XML loader after Assembly.
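To give an idea of what that XML emergency fix amounts to, here is a minimal sketch in Python of shortening node and attribute names automatically. This is not what we actually did (the real fix was done by hand, on a file loaded by TinyXML in C++); the element names in the sample and the `shorten_names` helper are made up purely for illustration.

```python
import xml.etree.ElementTree as ET


def shorten_names(root):
    """Rename every tag and attribute to a short generated alias.

    Returns the alias table so a loader could map short names back.
    Tags and attributes share one alias table to keep the sketch small.
    """
    aliases = {}

    def alias(name):
        # The first name seen becomes "a0", the next "a1", and so on.
        if name not in aliases:
            aliases[name] = "a%d" % len(aliases)
        return aliases[name]

    for elem in root.iter():
        elem.tag = alias(elem.tag)
        old_attributes = list(elem.attrib.items())
        elem.attrib.clear()
        for key, value in old_attributes:
            elem.set(alias(key), value)
    return aliases


# Hypothetical fragment of a generated demo script.
SCRIPT = (
    '<DemoScript>'
    '<SceneNode nodeName="car" positionX="1.0">'
    '<AnimationTrack trackName="drive"/>'
    '</SceneNode>'
    '<SceneNode nodeName="spider" positionX="2.0"/>'
    '</DemoScript>'
)

root = ET.fromstring(SCRIPT)
aliases = shorten_names(root)
small = ET.tostring(root, encoding="unicode")
print(len(SCRIPT), "->", len(small), "characters")
```

Shorter names only shrink the file and the strings the parser has to allocate; they do nothing about the parser's allocation pattern itself, which is why the loader still needed a rewrite afterwards.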

One of the biggest headaches was the music. Fairlight (/CNCD/Orange), unlike many other demogroups, does not have one active musician who does all our soundtracks. We have a few musicians we work with but most have moved away from the scene onto other things – some went pro, some just got out entirely. In some ways it’s good because we are able to look around and find the right sound for each project, not be tied to what one guy can do, and we’ve had some really great soundtracks over the years by the likes of Northbound Sound, Little Bitchard, Ercola, Sumo Lounge and others. The problem is we’ve got no one person in the group who takes responsibility for it. I don’t think it’s an exaggeration to say this demo has been through the most difficult musical journey of any demo I’ve worked on. Over the year to 18 months that it’s been an active project, we’ve had at least five musicians involved, and many tracks. It seems that the more brilliant the musician the harder they are to lock down – they always have other projects on the go and don’t have the time to dedicate to this. With a few weeks to go until Assembly we finally got Ercola (responsible for the Media Error soundtrack and a great producer and artist) involved. He was a guy we knew could turn it around very quickly and do a good job, which is exactly what we needed. Even so it was seriously nerve-wracking up until the last week before Assembly when we finally got the track. By the way, if anyone out there is a great musician give us a call, we are always looking for good musical input. 🙂

Frameranger contains a lot of graphics and a lot of code. There is a whole collection of effects and rendering techniques, some of which will get a blog post on their own. I decided to go for completely deferred rendering and it worked out great. As well as being able to use as many lights as we wanted it greatly reduced the number of shaders being generated by the ubershader (a major issue on Panic Room). I added the ability to combine multiple (dynamic) environment maps like lights in the deferred render, and support for secondary rays cast off the deferred buffers for ambient occlusion. In fact almost everything in the demo has ambient occlusion, generated one way or another in realtime through various techniques. One of the best things was being able to combine traditional polygon geometry and raytraced effects seamlessly – e.g. we raytraced the liquid effects straight into the deferred buffers and sent them through the same lighting pipeline as everything else, casting and receiving shadows etc.
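For anyone who hasn't seen deferred rendering before, the core idea fits in a few lines. The sketch below is plain Python rather than shader code, and everything in it (`GBufferTexel`, `PointLight`, the linear falloff) is an assumption made for illustration, not the demo's actual engine. Geometry is written once into a buffer of positions, normals and colours, and then one lighting routine loops over the lights per pixel.

```python
import math
from dataclasses import dataclass


@dataclass
class GBufferTexel:
    position: tuple  # world-space position stored by the geometry pass
    normal: tuple    # unit surface normal
    albedo: tuple    # diffuse colour


@dataclass
class PointLight:
    position: tuple
    colour: tuple
    radius: float    # the light has no effect beyond this distance


def shade(texel, lights):
    """Lighting pass: sum the Lambert term of every light for one texel."""
    out = [0.0, 0.0, 0.0]
    for light in lights:
        to_light = [l - p for l, p in zip(light.position, texel.position)]
        dist = math.sqrt(sum(c * c for c in to_light))
        if dist == 0.0 or dist >= light.radius:
            continue
        direction = [c / dist for c in to_light]
        n_dot_l = max(0.0, sum(n * d for n, d in zip(texel.normal, direction)))
        falloff = 1.0 - dist / light.radius  # simple linear attenuation
        for i in range(3):
            out[i] += texel.albedo[i] * light.colour[i] * n_dot_l * falloff
    return tuple(out)


texel = GBufferTexel(position=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0),
                     albedo=(1.0, 1.0, 1.0))
lights = [
    PointLight(position=(0.0, 1.0, 0.0), colour=(1.0, 0.0, 0.0), radius=2.0),
    PointLight(position=(0.0, -1.0, 0.0), colour=(0.0, 1.0, 0.0), radius=2.0),
]
print(shade(texel, lights))
```

The materials only have to know how to write into the buffer, and the lighting code exists once, which is where the drop in shader permutations comes from.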

Raytracing / ray marching popped up in numerous places. We used volumetric lights for the car headlights which were ray marched through the shadow map in screen space as a post effect. The surfaces for the fluids were raytraced on GPU too, using a technique I invented to handle almost unlimited numbers of metaballs (up to around 100,000). Of course there were other effects at work too – many post effects, particles, breaking floors and so on. However, most of them were mixed into the design and graphics so they were almost hidden away – it’s the kind of thing where you only notice them when they’re gone.
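The volumetric headlights boil down to a loop like the one below: step along the view ray and, at each sample, ask whether that point can see the light. This is only a CPU sketch in Python. In the demo the test was a shadow-map lookup in a post effect; here a hypothetical `in_light` callback stands in for it, and the function name and parameters are invented for the example.

```python
def march_volumetric_light(origin, direction, max_dist, steps, in_light):
    """Return the fraction of the ray that lies in lit space.

    in_light(point) -> bool is a stand-in for the shadow-map lookup.
    """
    step = max_dist / steps
    lit = 0
    for i in range(steps):
        t = (i + 0.5) * step  # sample the middle of each segment
        point = tuple(o + d * t for o, d in zip(origin, direction))
        if in_light(point):
            lit += 1
    return lit / steps


# Toy scene: everything nearer than z = 5 is lit, the rest is in shadow.
def toy_shadow_test(point):
    return point[2] < 5.0


amount = march_volumetric_light(
    origin=(0.0, 0.0, 0.0),
    direction=(0.0, 0.0, 1.0),
    max_dist=10.0,
    steps=10,
    in_light=toy_shadow_test,
)
print(amount)
```

The returned fraction would then scale the light colour added to the pixel. More steps give smoother shafts at a higher cost per pixel.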

Fortunately we had the antidote to that – the “demo part”. My new fluid solver / renderer was ready at last for that. I had written a new 3D solver for fluids running on the GPU which used a new technique to get higher resolution and better performance: I evaluated the velocities at lower resolution – and even then the grids were still much larger than in Media Error thanks to modern GPU performance. Then I used the velocity grid to drive a procedural fluid flow solver to approximate the look of fluid eddies and mixed that with the velocity grid. Then I applied that to a high res density grid to push it around. The results were superb. The procedural flows weren’t tied to the grid resolution so they could produce really sharp results which didn’t lose detail. The velocity grid just had to handle the overall rough motion.
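The structure of that solver can be sketched in 2D with plain Python. To be clear, this is a simplified illustration and not the demo's GPU code: the sine-based `detail_velocity` is just one assumed way of producing divergence-free procedural eddies, and the names, the scaling by local speed and the semi-Lagrangian advection step are my choices for the example. A coarse velocity grid gives the rough motion, a procedural flow adds detail that is independent of grid resolution, and the sum pushes a higher resolution density grid around.

```python
import math


def sample(grid, u, v):
    """Bilinearly sample a 2D scalar grid at normalised coords in [0, 1]."""
    h, w = len(grid), len(grid[0])
    x = min(max(u, 0.0), 1.0) * (w - 1)
    y = min(max(v, 0.0), 1.0) * (h - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = grid[y0][x0] + (grid[y0][x1] - grid[y0][x0]) * fx
    bottom = grid[y1][x0] + (grid[y1][x1] - grid[y1][x0]) * fx
    return top + (bottom - top) * fy


def detail_velocity(u, v, frequency):
    """Curl of the stream function sin(k*u) * sin(k*v) / k: swirly, no divergence."""
    k = 2.0 * math.pi * frequency
    return (math.sin(k * u) * math.cos(k * v),
            -math.cos(k * u) * math.sin(k * v))


def combined_velocity(coarse_vx, coarse_vy, u, v, detail_scale, frequency):
    """Coarse grid velocity plus procedural eddies scaled by the local speed."""
    bx, by = sample(coarse_vx, u, v), sample(coarse_vy, u, v)
    speed = math.hypot(bx, by)
    dx, dy = detail_velocity(u, v, frequency)
    return (bx + detail_scale * speed * dx, by + detail_scale * speed * dy)


def advect_density(density, coarse_vx, coarse_vy, dt,
                   detail_scale=0.5, frequency=4.0):
    """Semi-Lagrangian step: each cell looks upstream for its new value."""
    h, w = len(density), len(density[0])
    out = [[0.0] * w for _ in range(h)]
    for j in range(h):
        for i in range(w):
            u, v = i / (w - 1), j / (h - 1)
            vx, vy = combined_velocity(coarse_vx, coarse_vy, u, v,
                                       detail_scale, frequency)
            out[j][i] = sample(density, u - vx * dt, v - vy * dt)
    return out


# 2x2 velocity grid driving a 16x16 density grid with a blob in one corner.
coarse_vx = [[0.2, 0.2], [0.2, 0.2]]
coarse_vy = [[0.0, 0.0], [0.0, 0.0]]
density = [[1.0 if i < 4 and j < 4 else 0.0 for i in range(16)]
           for j in range(16)]
moved = advect_density(density, coarse_vx, coarse_vy, dt=0.1)
```

Velocities here are in normalised grid units per unit time. Because the eddies are evaluated analytically at the density grid's resolution, their sharpness doesn't depend on how coarse the velocity grid is.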

Then we had to do something interesting with it. In the end we used it for two effects – a liquid renderer driving particles and an isosurface which was raytraced, and a smoke renderer. Both had a full lighting and shadowing pipeline – giving us superb results. For both effects we were able to use voxelised meshes as the source input. We tried a few things for the smoke but in the end we used the effect to destroy some credits text. Unfortunately it was a prime example of artistic vs technical compromise, of which there is a lot in the demo. The scene didn’t show off the power of the effect to the fullest – it didn’t show all the clever features of the effect – but it looked really nice visually, with puffs of coloured smoke. Of course such things are completely lost on the audience. One genius commenter on pouet said about the scene, “nice plasma”. Nice plasma! It makes you glad you bothered with weeks or even months of work trying to innovate in the realm of realtime fluid dynamics, when your results are compared to an ancient demo effect.

One scene that worked out surprisingly well was the “pixel blocks” sequence. It was a simple effect – a grid of cubes, animated by rendering something to texture and using it as a heightfield – made “cool” by the use of raytraced heightfield-based realtime ambient occlusion which gave it the nice shading it had. Surprisingly it ended up as one of the most popular scenes in the demo, yet it was by far the easiest and took about an hour of work to put together on the Saturday morning of the deadline.
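Heightfield ambient occlusion is easy to sketch because the "scene" is just a 2D array of heights. The Python below is a horizon-style approximation written for illustration, not the demo's shader: from each cell it walks outwards in eight directions, finds the steepest slope up to a neighbouring block, and darkens the cell by how much of the sky that horizon hides. The function name, direction set and step count are all assumptions.

```python
import math

# Eight walking directions across the grid (axis-aligned and diagonal).
DIRECTIONS = [(1, 0), (1, 1), (0, 1), (-1, 1),
              (-1, 0), (-1, -1), (0, -1), (1, -1)]


def heightfield_ao(heights, i, j, max_steps=4):
    """Return visibility in [0, 1] for cell (i, j); 1.0 means fully open."""
    h, w = len(heights), len(heights[0])
    base = heights[j][i]
    visibility = 0.0
    for dx, dy in DIRECTIONS:
        step_len = math.hypot(dx, dy)
        max_slope = 0.0  # slopes below the horizontal do not occlude
        for s in range(1, max_steps + 1):
            x, y = i + dx * s, j + dy * s
            if not (0 <= x < w and 0 <= y < h):
                break
            slope = (heights[y][x] - base) / (s * step_len)
            max_slope = max(max_slope, slope)
        horizon = math.atan(max_slope)  # elevation angle of the blocker
        visibility += 1.0 - math.sin(horizon)
    return visibility / len(DIRECTIONS)


flat = [[0.0] * 5 for _ in range(5)]
pit = [[0.0 if (i, j) == (2, 2) else 1.0 for i in range(5)] for j in range(5)]
print(heightfield_ao(flat, 2, 2), heightfield_ao(pit, 2, 2))
```

A cube at the bottom of a pit comes out dark and one on a peak stays fully lit, which is what gives a plain grid of blocks its soft contact shading.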

A special word has to go to the work Destop did on the graphics and direction for the demo. He built most of the demo in our demo tool. The battle scene had around 40 cameras and a massive amount of carefully placed animations, and the whole scene contains thousands of nodes where most scenes contain 10 to 100 – it’s by far the biggest thing I’ve ever seen made with the demo tool. It frightened me a bit actually. We also had Der Piipo doing a lot of modelling and animation work, and Mazor showed up with the 2D HUD gfx at the end – just in time to fill some gaps.

Sadly, the demo still had problems. We knew the battle scene was the crux of the demo – make or break – and it was the biggest and hardest scene to do. A long action sequence – part built in Lightwave, part in the demo tool – with a lot of explosions and smoke. It was the smoke that caused me a huge headache. I went over and over this trying different solutions and none of them worked well. The requirements were: it had to fill a lot of space, with multiple explosions around the environment at once; it had to persist, so it didn’t fade out visibly; it had to fit with the meshes and lighting; it should look a bit stylised – not super realistic, but still cool and smoke-like; and the frame budget wasn’t massive for it as the 3D was already eating a lot of power. Those requirements meant I had to rule out something really cool like proper fluid dynamics – the scene was too big for grid-based effects. We could only handle a certain number of particles in the frame rate, and the lighting and shading would have to be faked. I tried various techniques and wrote the effect a few times, and it never quite worked out – so I kept putting it off. In the end I rushed something out in a couple of days and the solution wasn’t satisfactory – a hand-coded particle effect that could be spawned around the environment as needed. I didn’t like the end result much at all. That was one thing that went to the top of the list for the final.

We had other problems too. In the end, even the best laid plans break down as the deadline nears. I wasn’t travelling to Helsinki and I had to go to a wedding on the Saturday morning, so that ruled out real last minute crunches – but somehow we ended up doing that anyway. For the last week before Assembly I got up at 6am and went to work every day, working on the demo on the train and at lunchtime whenever time allowed, and then came home and worked on it through the evening and half the night too. Then I got up the next morning and did it all again. The problem with demo crunches is that unlike work crunches, there’s much less external pressure to do it. For work you know you don’t really have a choice. For a demo you always have at the back of your mind, “I could ditch this right now and it wouldn’t matter”. When you’re exhausted and stressed out in the middle of the night you keep going because you don’t want to give up, you want to get it done and you don’t want to let your teammates (who are also up in the middle of the night working with you) down.

Come the Thursday night we still had a lot to do. I took the day off work on Friday and worked on it solidly, with 3-4 hours sleep on Thursday night and less on Friday. We missed the deadline on Friday night but after a night and morning of work, come Saturday lunch time it was done. All that was left was to hand it in, get refreshed, shower, and drive to the wedding – where I think I looked like a zombie. Then, come the evening, the competition started far away in a hockey stadium in Helsinki. The wedding was in the middle of nowhere so mobile phone reception was poor to non-existent, but I managed to go outside into the car park and get a bar – and that was when I finally got the news I had been waiting for by SMS, first from my groupmate and then from a load of other friends who had been watching the competition, either at the party or at home watching the live stream. “What happened?” I asked. The reply came back – “finally we’re going to get the trophy 🙂”. “Is it close or did we destroy the competition?”, I asked. “Destroy :)” came the answer. I went back inside and enjoyed the rest of the wedding with a grin on my face. It seemed like we had finally done it.