During the making of Frameranger, I spent some time looking into making a “modern particle system”. Particles have been around for ever and ever, and by and large they haven’t changed that much in demos over the last 5-10 years. You simulate the particles (around 1000 – 100,000 of them) on the CPU, animating them using a mix of simple physics, morphs and hardcoded magic; sort them back to front if necessary, and then upload the vertex buffers to the GPU where they get rendered as textured quads or point sprites. The CPU gets hammered by simulation and sorting, and the GPU has to cope with filling all of the alpha blended, textured pixels.

However, particles in the offline rendering / film world have changed a lot. Counts in the millions, amazing rendering, fluid dynamics controlling the motion. Renderers like Krakatoa have produced some amazing images and animations. I spent some time looking around on the internet at all sorts of references and tried to nail down what those renderers had but I didn’t – and therefore needed. This is something I do a lot when developing new effects or demos. Why bother looking at what’s currently done realtime? That’s already been done. 🙂

I decided on the following key things I needed:

1. Particle count. I want more. I want to be able to render sand or smoke or dust with particles. That means millions. 1 million would be a good start.

2. Spawning. Instead of just spawning from a simple emitter, I want to be able to spawn them using images or meshes.

3. Movement. I want to apply fluid dynamics to the particles to make them behave more like smoke or dust. And I want to morph them into things, like meshes or images – not just use the usual attractors and forces.

4. Shading. To look better the particles really need some form of lighting – to look like millions of little things forming a single solid-ish whole, not millions of little things moving randomly and independently.

5. Sorting. Good shading implies not additive blending, which implies sorting.

The problem with simulating particles on the CPU is that no matter how fast the simulation code on the CPU is, you’re going to hit two bottlenecks sooner or later: 1. you have to get that vertex data to the GPU – and that can make you bandwidth limited; and 2. you need to sort the particles back to front if you want to shade them nicely, which gets progressively slower the more you have. Fortunately given shader model 3 and up, it’s quite doable to make a particle system simulate on the GPU. You make big render targets for the particle positions, colours and so on; simulate in a pixel shader; and use vertex texture fetch to read from those textures in the vertex shader and give you an output position. Easy. Well, not quite – simulating on the GPU brings its own set of problems, but on to that later. Modern GPUs are sufficiently fast to easily perform the operations to simulate millions of particles in the pixel shader, and outputting 1 million point sprites from the vertex shader is doable.

Shading is the biggest problem here, and the shading problem is mainly a lighting problem. Lighting for solid objects means a mix of diffuse and specular reflection, shadows, and global illumination. Computing diffuse and specular reflection requires a normal, something which particles do not really have, unless we fake it. So that was my first line of attack – generate a normal for the particle. It would need to be consistent with the shape of the system, locally and globally, if it was going to give a good lighting approximation. I tried to use the position of the particle to generate a normal. It turns out that’s rather difficult if you’ve got something other than a load of static particles in the shape of a sphere or box. Then I tried to use a mesh as an emitter and use the underlying normal from the mesh for the particle. It did work, but of course once the particle moves away from its spawn point the normal becomes less and less accurate.

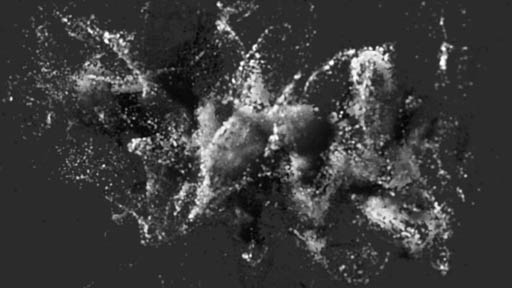

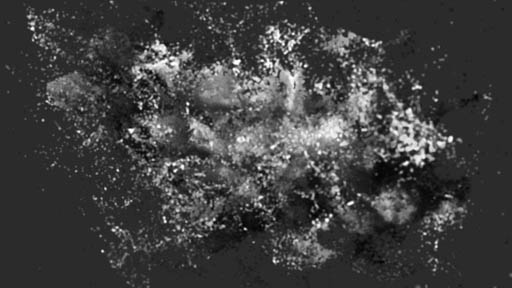

The image here shows particles generated from the car mesh in Frameranger, matching the shading and lighting.

I needed a better reference, so I looked away from solid objects and had a look at how you would light a volumetric object – e.g. a cloud. Which in real life is actually millions of millions of little particles, so maybe it makes quite a good match to lighting, well.. particles. It works out as a model of scattering and absorption. You cast light rays into the volume, and ray march through it. Whenever the ray hits a cell that isn’t empty, a bit of the light gets absorbed by the cell, a bit of it gets scattered along secondary rays in different directions, and the rest passes on to the next cell. The cell’s brightness is the amount of light remaining on the ray when it gets to that cell. Scattering properly is hideously slow and expensive so we’ll just completely ignore it, and instead add a global constant to fake it (a good old “ambient” term). That just leaves us with marching rays through the volume and subtracting a small amount per cell, scaled by the amount of stuff in the cell. This actually works great, and I’ve used it for shading realtime smoke simulations – with a few additional constraints, like restricting it to directional lights from a fixed direction, you can do it pretty efficiently. It looks superb too.
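As a rough CPU-side illustration of that absorption-only march (not the actual shader), here is a minimal sketch. It assumes a directional light travelling along the first axis of a density grid; the names `march_light`, `absorption` and `ambient` are made up for the example.

```python
import numpy as np

def march_light(density, absorption=0.1, ambient=0.05):
    """Light a 3D density grid with a directional light travelling along axis 0."""
    # One ray per column of the grid, each starting at full intensity.
    light = np.ones(density.shape[1:], dtype=np.float64)
    brightness = np.zeros(density.shape, dtype=np.float64)
    for z in range(density.shape[0]):
        # A cell's brightness is the light left on the ray when it arrives,
        # plus the constant "ambient" term that stands in for scattering.
        brightness[z] = light + ambient
        # Subtract a small amount scaled by how much stuff is in the cell.
        light = np.maximum(light - absorption * density[z], 0.0)
    return brightness
```

Cells behind a dense region come out darker than cells in front of it, which is the self-shadowing that gives smoke its shape.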

The problem is that the particles are not in a format that is appropriate for ray marching (like a volume texture). But the look is great – we just need a way of achieving it for particles. What we’re dealing with is semi-transparent things casting shadows, so it makes sense to research how to handle that. The efficient way of handling shadows for things nowadays is to use shadow maps. But shadow maps only work for opaque things – they give you the depth of the closest thing at each point in a 2D projection of light space. For alpha things you need more information than that, because otherwise the shadows will be solid.

Or do you? The first thing I tried was very simple – to use exponential shadow maps. Exponential shadowmaps have a great artefact / bug where the shadow seems to fade in close to the caster, and this is usually annoying – but for semi-transparent stuff we can use it to our advantage. Yep, plain old exponential shadowmaps actually work pretty well as shadowmaps for translucent objects – as long as those translucent objects aren’t all that translucent (e.g. smoke volumes). The blur step also makes small casters soften with those around them. It’s pretty fast too, and it almost drops into your regular lighting pipeline. But, for properly transparent (low alpha) stuff like particles, it’s not quite good enough.

The really nice high end offline way is to use deep shadow maps. That basically gives you a function or curve that gives you the shadow intensity at a given depth value. It’s usually generated by buffering up all the values written to each pixel in the map (depth and alpha), sorting them, and fitting a curve to them which is stored. Unfortunately it doesn’t map too well to pixel shader hardware. However there is a discrete version which is much simpler – opacity shadow maps. For this you divide depth into a series of layers and, at each layer, sum up the alpha values of the stuff written in front of that layer (i.e. between the light and the layer). On modern GPUs that’s actually pretty easy – you can fit 16 layers into 4 MRTs of 4 channels each, and render them in one pass! Unfortunately it’s not expandable beyond that without adding more passes, but it’s good enough to be getting on with – as long as you don’t need to cover such a large depth range that the layers end up too spaced out. But this gives us nice shadows which work with semi-transparent stuff properly. You could even do coloured shadows if you didn’t mind fewer layers or multiple passes.
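The layer accumulation can be sketched on the CPU for a single shadow-map texel. This is only an illustration: it assumes each layer stores the opacity of everything between the light and that layer, and the helper names (`accumulate_opacity`, `shadow_at`) and the linear interpolation between layers are choices made for the example, not details from the demo.

```python
import numpy as np

NUM_LAYERS = 16  # 4 render targets x 4 channels

def accumulate_opacity(depths, alphas, z_min, z_max):
    """Per layer, sum the alpha of every particle nearer the light than that layer."""
    layer_depths = np.linspace(z_min, z_max, NUM_LAYERS)
    # mask[p, l] is 1 where particle p lies in front of layer l (the shader's compare).
    mask = (depths[:, None] < layer_depths[None, :]).astype(np.float64)
    # Additive blending: every particle adds alpha * mask into each layer.
    layer_opacity = (alphas[:, None] * mask).sum(axis=0)
    return layer_depths, layer_opacity

def shadow_at(depth, layer_depths, layer_opacity):
    """Accumulated opacity at a light-space depth, interpolated between layers."""
    return float(np.interp(depth, layer_depths, layer_opacity))
```

On the GPU the sum comes for free from additive blending into the four render targets; the mask is the only per-pixel work.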

The next issue is how to apply that shadow information to the particles – it requires sampling from 4 maps plus a bunch of maths, and isn’t all that quick. If we did it for every pixel rendered for the particles, it’d hammer the already stressed pixel shader. If the particles are small enough we could just sample it once per particle in the vertex shader – but it’s too many textures to sample. Fortunately the solution is easy – just calculate a colour buffer using the fragment shader, with all the lighting and shading information per particle in it, and sample that in the vertex shader. The great thing about that is it’s really similar in concept to the deferred renderer I’ve already got for solid geometry. You have a buffer containing positions and other information; you perform the lighting in multiple passes, one per light, blending into a composite buffer; then sample that composite buffer to get the particle colour when rendering to the screen. It’s so similar in fact to the deferred rendering pipeline that I can use almost the same lighting code, and even the same shadow maps from solid geometry to apply to the particles too – so particles can cast shadows on geometry, and geometry can cast shadows on particles.

This shading pipeline – compositing first to a buffer, one pixel per particle – opens up new options. We can do all the same tricks we do in deferred rendering, like indexing a lookup table which contains material parameters for example. Or apply environment maps as well as lights. Or perform more complicated operations like using the particle’s life to index a colour lookup texture and change colour over the life of the particle – make it glow at first then fade down. It allows multiple operations to be glued together as separate passes rather than making many combinations of one shader pass.

So, we have a particle colour in a buffer. The next job is to render the particles to the screen. We’ve gone to so much effort to colour them well that we need to consider sorting – back to front – so it actually looks right. This could be problematic – we’ve got 1 million+ particles to sort, all moving independently and potentially quite quickly and randomly, and it has to be done on the GPU not the CPU – we can’t be pulling them back to the CPU just to sort.

I had read some papers on sorting on the GPU but I decided it looked totally evil, so I ignored them. My first sorting approach was basically a bucket sort on the GPU. I created a series of “buckets” – between 16 and 64 slices the size of the screen, laid out on a 2D texture (which was massive, by the way), with z values from the near to the far plane. Then I rendered the particles to that slice target, and in the vertex shader I worked out which slice fit the particle’s viewspace z value, and offset the output position to be in that slice. So, in one pass I had rendered all the particles to their correct “buckets” – all I had to do was to blend the buckets to the main screen from back to front, and I got a nicely sorted particle render which rendered efficiently – not much slower than not sorting at all. Unfortunately it had some problems – it used an awful lot of VRAM for the slice target, and the granularity of the slices was poor – they were too spread out, so sometimes all the particles would end up in one slice and not be sorted at all. I improved the Z ranges of the slices to fit the approximate (i.e. guessed) bounding box of the particle system, but it still didn’t have great precision. In the end on Frameranger the VRAM requirements were simply too high, and I had to drop the effect. It turns out that the layers method is very useful for other things though, like rendering particles into volumes or arbitrary-layered opacity shadow maps.
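The vertex shader part of that bucket sort boils down to a little arithmetic. The sketch below is a guess at its shape, assuming the slices are tiled in a `grid_w` x `grid_h` atlas with evenly spaced z ranges; the function names and the atlas layout are invented for the example.

```python
import numpy as np

def slice_index(view_z, z_near, z_far, num_slices):
    """Which depth slice a view-space z falls into (0 = nearest slice)."""
    t = (np.asarray(view_z, dtype=np.float64) - z_near) / (z_far - z_near)
    return np.clip((t * num_slices).astype(int), 0, num_slices - 1)

def atlas_position(screen_uv, view_z, z_near, z_far, grid_w, grid_h):
    """Offset screen positions (u, v in [0, 1]) into each particle's slice tile."""
    idx = slice_index(view_z, z_near, z_far, grid_w * grid_h)
    # Column and row of the tile that holds this slice in the atlas.
    tile = np.stack([idx % grid_w, idx // grid_w], axis=-1)
    return (screen_uv + tile) / np.array([grid_w, grid_h]), idx
```

Compositing is then a loop over the tiles from the farthest slice to the nearest, blending each one over the screen. The precision problem is visible in `slice_index`: if the particles only span a small part of the z range, they all get the same index.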

When I revisited the particle effect, I knew the sorting had to be fixed. I looked back at the papers on GPU sorting, specifically the one in GPU Gems. They seemed very heavyweight – a sort of a 1024×1024 buffer (i.e. 1 million particles) would require 210 passes over that buffer per frame, which is completely infeasible on a current high end GPU. But there was one line which caught my attention – “This will allow us to use intermediate results of the algorithm that converge to the correct sequence while we do more passes incrementally”. One of the sorting techniques would work over multiple frames – i.e. for each iteration of the algorithm, the results would be more sorted than the previous iteration – it would not give randomly changing orders, but converge on a sorted order. Perfect – we could split the sort over N frames, and it would get better and better each frame. That’s exactly what I did, and it actually worked great. It used much less memory than the bucket sort method and gave better accuracy too – and the performance requirements could be scaled as necessary in exchange for more frames needed to sort.
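To make the “converges over several frames” idea concrete, here is a small CPU sketch. The post does not say which algorithm was used, so this takes odd-even transposition sort purely as the simplest data-independent example: every pass only ever removes out-of-order neighbours, so stopping early always leaves a better order than before. The 210 figure is consistent with a merge-based sorting network, which needs log2(n)·(log2(n)+1)/2 passes; that formula and all the names below are assumptions for illustration.

```python
import math

def passes_for_sorting_network(n):
    """Pass count log2(n) * (log2(n) + 1) / 2 of a merge-based sorting network."""
    k = int(math.log2(n))
    return k * (k + 1) // 2

def odd_even_pass(keys, parity):
    """One pass: compare-and-swap neighbouring pairs starting at index `parity`."""
    for i in range(parity, len(keys) - 1, 2):
        if keys[i] > keys[i + 1]:
            keys[i], keys[i + 1] = keys[i + 1], keys[i]

def inversions(keys):
    """Number of out-of-order pairs; 0 means fully sorted."""
    n = len(keys)
    return sum(keys[i] > keys[j] for i in range(n) for j in range(i + 1, n))

class IncrementalSorter:
    """Runs a fixed budget of sorting passes per frame and remembers where it got to."""

    def __init__(self, passes_per_frame):
        self.passes_per_frame = passes_per_frame
        self.pass_index = 0

    def step(self, keys):
        for _ in range(self.passes_per_frame):
            odd_even_pass(keys, self.pass_index % 2)
            self.pass_index += 1
```

In a real system the depth keys change every frame as the particles and camera move, so the order is never perfect; `passes_per_frame` is the knob that trades GPU time against how quickly the order catches up.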

There are some irritations with simulating particles on the GPU. Each particle must be treated independently and you have to perform a whole pass on all the particles simultaneously. It makes things which are trivial on the CPU, like counting how many particles you emitted so far that frame, very difficult or not feasible at all on the GPU. But it’s a rather important thing to solve – you often need to be able to emit particles slowly over time, rather than all at once. The first way I tried to solve that was to use the location of the particle in the position buffer. I would for example emit the particles in the y range 0 to 0.1 on the first frame, then 0.1 to 0.2 on the next, and so on. It worked to a point, but fell down when I started randomising the particle’s lifetime – I needed to emit different particles at different times. Then I realised something useful. If you’re dealing with loads and loads of something – like a near infinite amount – then doing things randomly is as good as doing things correctly. I.e. I don’t need to correctly emit say 100 particles this frame – I just need to try to emit e.g. roughly 1% of particles this frame and if I’ve got enough particles in the first place, it’ll look alright. The trick is making sure that 1% has the right amount of randomness.

I’ll explain. The update goes like this: 1. generate a buffer of new potential spawn positions for particles. 2. Update the particle position buffer by reading the old positions, applying the particle velocities to them, and reducing the life; then if the life is less than 0, pick the corresponding value from the spawn position buffer and write that out instead. So, each frame I generate a whole set of spawn positions for the particles, but they only get used if the particle dies. But how to control the emission? Clearly if I put a value in the spawn buffer which has an initial life of less than 0 and it gets used, it’ll get killed by the renderer anyway and the next frame around it’ll respawn again – i.e. the particle never gets rendered and doesn’t really get spawned either. So if I want to control the number of particles emitted I just limit the number of values in the spawn buffer each frame that have an initial life greater than 0.
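The update pass translates almost directly into whole-array operations, which is roughly how a pixel shader sees it: one output per particle slot, no communication between slots. This is a minimal sketch with made-up names; treating a life of exactly zero as dead and leaving the velocity untouched on respawn are assumptions.

```python
import numpy as np

def update_particles(pos, vel, life, spawn_pos, spawn_life, dt):
    """One simulation pass over every particle slot."""
    new_pos = pos + vel * dt
    new_life = life - dt
    # Dead slots take whatever the spawn buffer holds at the same index.
    dead = new_life <= 0.0
    new_pos = np.where(dead[:, None], spawn_pos, new_pos)
    new_life = np.where(dead, spawn_life, new_life)
    return new_pos, new_life
```

A spawn entry with a non-positive life leaves the slot dead, so it is skipped by the renderer and tries again next frame. Emission rate is therefore controlled entirely by how many spawn entries carry a positive life.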

How do I choose which spawn values have valid lives? It needs to be a good spread, because the emission life is also randomised – some particles die earlier than others and need respawning. If I simply use a rolling window it’s not random enough and particles stop being spawned properly. If I actually randomly choose, it’s too random – it becomes dependent on framerate, and on a fast machine the particles just all get spawned – the randomness makes it run through the buffer too fast. So, what I did was a compromise between them – a random value that slowly changes in a time-dependent way.
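The post doesn’t give the exact formula for that compromise, so the following is only one guess at what it could look like. Every slot gets a fixed random value (for example baked into a texture), and a window of the desired width slides over those values at a rate tied to time rather than to frame count. `spawn_enabled`, `sweep_speed` and the use of -1 as the “invalid” life are all hypothetical.

```python
import numpy as np

def spawn_enabled(slot_random, time_seconds, fraction, sweep_speed=0.25):
    """True for slots allowed to spawn now.

    slot_random holds one fixed random value in [0, 1) per particle slot.
    A window of width `fraction` slides over those values as time advances.
    """
    phase = (slot_random - time_seconds * sweep_speed) % 1.0
    return phase < fraction

def spawn_lives(slot_random, time_seconds, fraction, life):
    """Initial lives for the spawn buffer: `life` where enabled, -1 elsewhere."""
    return np.where(spawn_enabled(slot_random, time_seconds, fraction), life, -1.0)
```

Because the mask depends only on the clock, a machine running at twice the framerate sees the same slots enabled at the same moments, just sampled more often. The enabled slots are still scattered across the whole buffer, unlike a plain rolling window.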

The other nice thing about this spawn buffer was that it made it easy to combine multiple emitters. I could render some of the spawn buffer from one emitter, some from another, and it would “just work”. One of the first emitters I tried was a mesh emitter. The obvious way would be to emit particles from the vertices but this only worked well for some meshes – so instead I generated a texture of random positions on the mesh surface. I did this by firstly determining the total area of all the triangles in the mesh; then for each triangle spawning a number of particles, which was the total number of particles * (triangle area / total area). To spawn random positions within a triangle I just used a random barycentric coordinate.
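Here is a small sketch of area-weighted surface sampling, as a way to pre-bake such a position texture. It differs from the description above in one respect: rather than giving each triangle a fixed share of the particles, it picks a triangle at random with probability proportional to its area, which gives the same distribution on average. The square root in the barycentric weights is a standard trick to keep points uniform within a triangle; whether the original did this is not stated.

```python
import numpy as np

def sample_mesh_surface(vertices, triangles, count, rng):
    """Return `count` random points spread evenly over a triangle mesh's surface."""
    a, b, c = (vertices[triangles[:, i]] for i in range(3))
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    # Choose triangles with probability (triangle area / total area).
    tri = rng.choice(len(triangles), size=count, p=areas / areas.sum())
    # Random barycentric weights; sqrt avoids clustering near one corner.
    r1 = np.sqrt(rng.random(count))
    r2 = rng.random(count)
    w = np.stack([1.0 - r1, r1 * (1.0 - r2), r1 * r2], axis=1)
    return w[:, 0:1] * a[tri] + w[:, 1:2] * b[tri] + w[:, 2:3] * c[tri]
```

The returned array can be written straight into a floating point texture and used as an emitter’s region of the spawn buffer.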

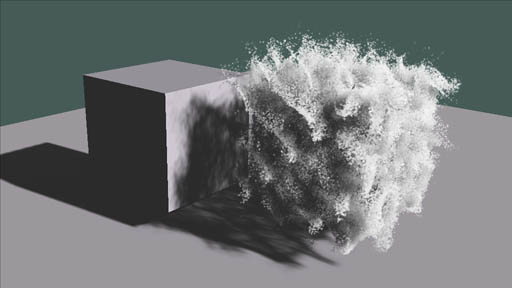

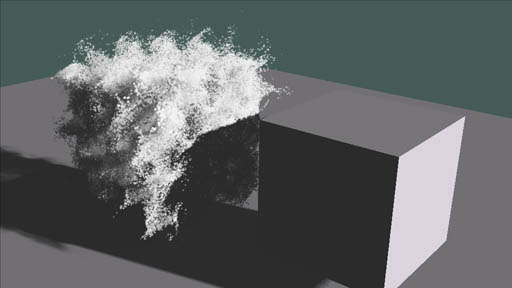

Here’s an early test case with particles generated for a logo mesh and being affected by fluid.

Finally I needed some affectors. Of course I did the usual forces, but I wanted fluid dynamics. The obvious idea was to use a 3d grid solver and drive the particles by the velocities. Well, that wasn’t great. The main problem was that the grid was limited to a small area, and the particles could go anywhere. Besides, the fluid solver was quite slow to update for a decent resolution. So I used a much simpler method that generated much better results – procedural fluid flows (thanks Mr Bridson). Essentially this fakes up a velocity field by using differentials of a perlin-style noise field to generate fluid-like eddies – “curl noise”. By layering several of these on top, combined with some simple velocities, it looked very much like fluid.
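The core of curl noise fits in a few lines. The sketch below takes the curl of a smooth vector potential by central differences; a sum of sines stands in for the Perlin-style noise a real implementation would use, and the function names and step size are assumptions. Because the velocity is a curl, it has no divergence, which is what makes particles swirl instead of bunching up or thinning out.

```python
import numpy as np

def potential(p):
    """Smooth vector potential; a stand-in for proper Perlin-style noise."""
    x, y, z = p[..., 0], p[..., 1], p[..., 2]
    return np.stack([
        np.sin(1.3 * y + 0.7 * z) + 0.5 * np.sin(2.9 * z + 1.1 * x),
        np.sin(1.7 * z + 0.4 * x) + 0.5 * np.sin(2.3 * x + 1.9 * y),
        np.sin(1.1 * x + 0.9 * y) + 0.5 * np.sin(3.1 * y + 1.3 * z),
    ], axis=-1)

def curl_noise(p, h=1e-3):
    """Velocity at positions p (shape (..., 3)): the curl of the potential."""
    def d(axis):
        # Central difference of all three potential components along one axis.
        offset = np.zeros(3)
        offset[axis] = h
        return (potential(p + offset) - potential(p - offset)) / (2.0 * h)
    dx, dy, dz = d(0), d(1), d(2)
    return np.stack([
        dy[..., 2] - dz[..., 1],
        dz[..., 0] - dx[..., 2],
        dx[..., 1] - dy[..., 0],
    ], axis=-1)
```

Layering is then just a sum of several `curl_noise` calls at different position scales and amplitudes, plus whatever plain velocities you want on top.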

The one remaining affector was something to attract particles to images. To do this I generated a texture from the image where each pixel contained the position of the closest filled pixel in the source image – a bit like a distance field but storing the closest position rather than the distance. Then, in the shader I projected the particle into image space, looked up the closest pixel and used that to calculate a velocity, weighted by the distance from the pixel. With a bit of randomness and adjustment to stop it affecting very new or very old particles, it worked a charm.
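A brute-force version of that closest-position texture is easy to write down for small images. This sketch is illustrative only: a real image would want a faster method for building the map, and the simple “strength times offset” velocity is just one way of weighting by distance. All names are hypothetical.

```python
import numpy as np

def closest_filled_pixel_map(mask):
    """For every pixel, the (row, col) of the nearest filled pixel in `mask`."""
    filled = np.argwhere(mask)  # (F, 2) coordinates of filled pixels
    rows, cols = np.indices(mask.shape)
    pixels = np.stack([rows, cols], axis=-1).reshape(-1, 1, 2)
    # Squared distance from every pixel to every filled pixel.
    dist2 = ((pixels - filled[None, :, :]) ** 2).sum(axis=-1)
    return filled[dist2.argmin(axis=1)].reshape(mask.shape + (2,))

def attract_velocity(particle_px, closest_map, strength=1.0):
    """Image-space velocity pulling a particle toward its nearest filled pixel."""
    limit = np.array(closest_map.shape[:2]) - 1
    r, c = np.clip(np.round(particle_px).astype(int), 0, limit)
    # The pull grows with the distance to the target pixel.
    return strength * (closest_map[r, c] - particle_px)
```

Storing the closest position rather than a distance means the shader gets a direction to move in from a single texture read.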

And there we have it – a “modern” particle system that works on DirectX9 – no CUDA required! I’m sure this will develop over time. With better GPUs the particle counts will go up fast – between 4 and 16 million is workable already on a top end Geforce, and it’ll go up and up with future hardware generations. In fact I have a host of other renderers for the particles besides this simple one – things to do metaballs, volume renders and clouds, for example – and a load of other improvements, but that can wait for another demo..

By the way, there’s a nice thing about GPU particles that maybe isn’t immediately obvious. You’re writing all the behaviour code (emission, affectors..) in shaders, right? And you can probably reload your shaders on the fly in your working environment. All of a sudden it makes development a lot easier. You don’t need to recompile and reload the executable every time you change the code, you can simply edit and reload the shader in the live environment. Great eh?

That fake fluid sim curl noise looks really good and the performance for that amount of particles is impressive. How many particles did you use in Blunderbuss?

Great work!

Comment by Rene Schulte — October 6, 2009 @ 6:13 pm

1 million (well actually 1024×1024.. 🙂 ). altho its only some places where the full amount is used.

Comment by directtovideo — October 7, 2009 @ 7:49 am

The technical achievement is impressive; you’ve combined a lot of techniques to reach your goal.

However I thought that you would be forced to code in DX11 (or 10) if you wanted to gain some flexibility in your particle system (like better spawning control or more complex behavior for each particle).

Anyway Nice work !

Comment by u2Bleank — October 7, 2009 @ 1:23 pm

you can do it all in dx9.. its just more painful. 🙂

Comment by directtovideo — October 7, 2009 @ 1:25 pm

yes you’re probably right.

It’s just more enjoyable if you can adjust the particle behavior in a language (like HLSL) and modify it on the fly to fine tune the final result without the pain of initializing 3 rendertargets with ping-pong operations between them.

I don’t develop in DX11 (or DX10) yet, but from what I’ve read it seems to be possible with those APIs.

Comment by u2Bleank — October 7, 2009 @ 3:03 pm

great stuff there smash

Comment by thec — October 7, 2009 @ 7:05 pm

great post! thanks for sharing info on your particle system, it’s gorgeous!

Comment by blackpawn — October 7, 2009 @ 9:12 pm

Wonderful work on this one. So exciting to see “demo scene” work out in the open like this. I really hope the general public start connecting with this because code is art.

Wonderful, Wonderful Work!

Comment by hunz — October 8, 2009 @ 12:20 am

totally amazing!!

but i need to know more if this…do you have a paper published? i want to introduce this in my game engine and i want to know more. like…you got some benchmark?

Comment by Mathieu — October 22, 2009 @ 12:52 am

No paper published on this subject no.. was just doing it for fun. 🙂 But if there is anything you need some information on or help with then let me know.

Comment by directtovideo — October 22, 2009 @ 8:13 am

plsssss publish a paper XD. but anyway, can the particles be generated from the mesh (the car) in real time? like transforming a 3d model into smoke in gameplay? do all the particles react to objects? what is the performance hit of 100k particles?

sorry for the noobs questions, but im still a beginner. anyway, again, amazing!

Comment by Mathieu — October 22, 2009 @ 7:57 pm

The particles can be generated in realtime from the car yes. The spawn positions on the car are pre-baked to a texture, but I can e.g. apply transforms and even skinning to the position buffer so that it could spawn from an animated character updating in realtime as well. It’s quite easy to do actually.

Particles are not colliding with objects at the moment, I might add it in future if I need it for something (it’s not too difficult if you have distance fields for the collision objects).

100k particles runs alright even on my laptop’s geforce 8400, so it’s not too much really. 🙂

Comment by directtovideo — October 26, 2009 @ 10:12 am

Wonderful, Wonderful Work!

Comment by Gülşehir Nevşehir Kapadokya — October 25, 2009 @ 8:18 am

[…] Smash, the main coder of Fairlight has published its vision of a modern particle system. […]

Pingback by Demoscene: Blunderbuss Direct3D Demo With One Million Particles | The Geeks Of 3D - 3D Tech News — October 28, 2009 @ 12:40 pm

Awesome!

Comment by Sander van Rossen — October 28, 2009 @ 2:55 pm

Could you explain how distance fields could help with the collision detection? Im struggling to see how this would work (and there has to be cpu-gpu synchronisation, is it efficiently doable with just directx9?)

Comment by martijn — October 29, 2009 @ 10:22 pm

Ok, first you compute a signed distance field representation for your 3D object – most likely stored in a volume texture, although there are some space-saving techniques using 2d textures or cubemaps. Of course if you’re using primitives you can generate the signed distances analytically. If you need to update the objects in realtime you can use volume textures stored as slices on a 2D texture. Generating distance fields for objects on the GPU was a pain, but it’s doable and it’s useful for some other routines too (think CSG, ambient occlusion..). I have a couple of different techniques, one of which is fast and one is accurate. 🙂

Then, when updating the particles you can sample that field for the particle and see if your particle lies inside the object – i.e. distance < 0. To make it more solid, you perform a ray march with N steps from your particle's position along the velocity vector, see if there were any intersections during the particle's movement in the frame, and perform a binary search to find the point where the distance is 0 – the exact intersection point. Then update the position and velocity accordingly. This is of course all easily doable inside a fragment shader – it's just distance field tracing.

Of course like a lot of collision detection problems with moving objects you can have issues with time steps and skipping over space, so you might need to run the collision process iteratively with several steps per update.

Not sure what you mean about the need for CPU/GPU sync – what do you need to get the CPU involved for except to provide the object's transform?

Comment by directtovideo — October 30, 2009 @ 8:56 am

[…] Europe, and its author has published a making-of on his blog, as well as a good post explaining how to achieve that smoke effect so […]

Pingback by Ref: S/N » Blog Archive » Máquinas de humo — December 14, 2009 @ 9:31 am

Could there be any possibility for having a look on the source code of this rather marvellous implementation?

Comment by mindbound — December 19, 2009 @ 7:46 pm

Looks really great, especially the innovations in gpu particle system design. Good to see that people still find new and creative ways to improve particle systems.

Could you please elaborate a bit more on how you handle your particle allocation?

I remember particle allocation being the biggest bottleneck in my GPU particle system.

I used the approach Lutz Latta described in his whitepaper, where he kept track of particle life on the CPU and reallocated them when needed.

Your blog seems to be the only post about GPGPU particles whose allocation isn’t done on the CPU.

Comment by Hellome — January 14, 2010 @ 9:29 pm

I’ll try to explain the particle allocation better. You are right – it’s a very difficult problem and it took some trickery to solve it.

I store the particle life remaining (and the initial life) in a texture. If the remaining life of a particle gets to 0 the particle won’t be rendered – it’s simply sent off screen in the vertex shader.

During update, I render a “spawn data buffer”. This buffer contains potential spawn positions, lives, colours etc of new particles – the buffer is the same size as the particle position buffer. It can be generated in different ways – e.g. all values could be set to the position of a single point emitter; it could use the vertices of a mesh; or it could be a mix of several spawn sources – they just write in their own areas of the spawn buffer. The values are usually slightly randomised and the “initial life” value is usually randomised too.

Next, when updating the particles (adding velocity to position, decrementing life etc) I check the particle life. If the life is 0 then I read the value from the spawn data buffer for that particle’s uv and write that out as the particle’s position instead – so the particle is respawned at the new location.

There’s an extra control term, though: sometimes I don’t want every particle to spawn immediately, I want to emit them slowly over time. What I do is control the initial life values written to the spawn data buffer. If the initial life value for a particle in that buffer is 0 and the particle is respawned, it will still have a life of 0 so it won’t appear and it will try to be respawned again next time. So, I can control the % of particles in the spawn buffer per frame (or indeed, per second) which have life values that are non-zero. As long as the set is changed over time so different particles will be respawned, and the change is done in a way that spawns reliably and smoothly over time, it works. (This can be as simple as “spawn non-zero lives from y=0 to y=0.1 at frame 0, and move that region slowly in y over time”.)

Why does this work? Well, it’s actually quite simple and based on one single assumption: everything is randomised and there are a lot of particles. If you generate an infinite number of random numbers with a really good random number generator, in theory you should end up with the complete set of numbers. If you generate “a lot” of random numbers you should end up with pretty good coverage of the set of numbers, and this is what I assume here: that I have enough particles and things are random enough that in the end it works well enough to do it all randomly. You get the coverage. Is it 100% correct, accurate etc? Nope. Does that matter? Nope. 🙂

Hope that helped.

Comment by directtovideo — January 15, 2010 @ 8:59 am

Thanks for the great explanation, I think I get it now.

I’ll try to alter my particlesystem to use the way you described, perhaps it’ll be more usable than it is now.

With the moving window thing though, wouldn’t sorting your particles make it really unpredictable? Seeing as all your living particles would probably congregate into a part of your texture? Or am I missing something?

Did you use points, point sprites, regular quads or camera aligned quads for your system? Because in my experience points are too limited in appearance. Point sprites are nice until you start rotating their textures. Quads should be more flexible in appearance yet I haven’t been able to make them behave properly.

Another short question though, do you use R2VB, VTF or some other method to turn the data in your data textures into vertex data? I simply want to see what your stance is on those techniques.

Comment by Hellome — January 15, 2010 @ 11:44 am

Yes – if you have a slow spawn rate, the living particles probably do congregate in one area. But that doesn’t matter at all – it’s not a problem. If you don’t make use of all the available particles that’s up to you; you can optimise the system by reducing the texture size and increasing the sliding window rate to compensate.

I use point sprites usually because my particles are small and numerous – a lot of the work is in the vertex shader so I wanted something fast there. I do support texture rotation and it works fine (but has to be performed in the fragment shader on d3d9). I have used billboarded quads (well, pairs of tris) as well which I camera-align in the vertex shader, but I tend to avoid them if point sprites are enough.

I use VTF – it’s efficient on unified shader architectures like the G80 (although obviously not on pre-G80 hardware). I never bothered with R2VB – VTF is fine for me. 🙂

Comment by directtovideo — January 15, 2010 @ 11:52 am

Keep the good work, we have better telepathies with this car stuff !

Comment by Barti — January 15, 2010 @ 10:43 am

This actually reminded me of the first thought that came into my mind when i first learned about 3D in one of my first computers:

That the most real possible way to model stuff in a computer would come down to particles glued together.

Of course that we don’t have the computer power to do that just yet, but i think experiments such as yours do get us in the right direction.

Thanks for sharing 🙂

Comment by Pedro Assuncao — January 21, 2010 @ 8:02 am

[…] a thoroughly modern particle system. « direct to video (tags: graphics 3d gpu physics gamedev) […]

Pingback by links for 2010-01-21 « Blarney Fellow — January 22, 2010 @ 1:32 am

This is really great stuff! It seems like a lot of effects could be pulled off much more realistically with this many particles.

I’m still wondering about one thing – can you explain a bit further about how you do the lighting? How do you render the shadowing information all in one pass?

Comment by Adam — February 14, 2010 @ 5:16 am

Opacity shadow maps are quite simple. You create 4 rendertargets in A16B16G16R16F format, bind them as the 4 MRTs and clear them to black. Then you pick a minimum and maximum z range for the shadow map (i.e. from the light’s perspective) that covers the range of space occupied by the particles – I use a bounding box or hand-placed boxes to calculate that. You then divide that range into 16 values – one per layer – and bind those values to the shader as 4 constants (4x float4s).

Next you render the particles as point sprites with depth test and write off, and alpha blend set to add. In the vertex shader you transform the particle by the light’s viewprojection matrix and pass the depth through to the pixel shader. In the pixel shader you perform a comparison between the depth and the 4 depth range float4 constants you set earlier. You multiply the particle’s alpha by the comparison results and output to the 4 output colours.

So in effect you are additive blending the particle opacity per layer, masked by a check to compare the particle’s light space depth against the layer’s depth. This gives you a sum per opacity shadow layer of the amount of particle mass in front of that layer.
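As a CPU-side illustration of that accumulation, here is a minimal Python sketch. The real thing runs in a pixel shader with additive blending. The function names, the even spacing of the layer depths and the one-texel footprint per particle are all assumptions made to keep it short, not details of the actual implementation.

```python
def layer_depths(z_min, z_max, layers=16):
    """Far boundary of each layer, evenly spaced over the light-space z range.

    Even spacing is an assumption; the text only says the range is divided
    into 16 values.
    """
    step = (z_max - z_min) / layers
    return [z_min + step * (i + 1) for i in range(layers)]


def accumulate_opacity(particles, z_min, z_max, width, height, layers=16):
    """Add each particle's alpha to every layer the particle lies in front of.

    particles: iterable of (x, y, depth, alpha), with x and y already in
    shadow-map texels and depth measured in light space.
    """
    depths = layer_depths(z_min, z_max, layers)
    shadow_map = [[[0.0] * layers for _ in range(width)] for _ in range(height)]
    for x, y, depth, alpha in particles:
        if not (0 <= x < width and 0 <= y < height):
            continue  # outside the shadow map
        texel = shadow_map[y][x]
        for i, layer_z in enumerate(depths):
            # The comparison result masks the alpha, as in the pixel shader.
            mask = 1.0 if depth <= layer_z else 0.0
            texel[i] += alpha * mask  # additive blend
    return shadow_map
```

With 16 layers, each group of four values would map onto the four channels of one of the four render targets.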

Hope that helps!

Comment by directtovideo — February 15, 2010 @ 9:14 am

Firstly, thank you for this interesting article. You’re combining many topics in an understandable way – it was a pleasure to read!

I have a question regarding your approach to compositing the shadow information into a colour buffer. You say that sampling once per particle would result in too many texture fetches per vertex (or did I misunderstand this one?). But how does compositing from the 16 layers into a colour buffer speed up this process? Wouldn’t I have to do the same number of texture lookups for every colour buffer pixel (aka every particle)?

Comment by JDee — March 22, 2010 @ 3:07 pm

I think I got the point by myself. I was overlooking the slowness of texture lookups in the vertex shader. I think unified shaders/SM 4.0 negate this point a little bit, but the possibility to composite several effects “on top” of each other still remains.

Comment by JDee — March 22, 2010 @ 4:18 pm

The key point is the difference between sampling the shadow map layers once per particle as I do, and once per pixel drawn to the screen if you sampled during the final rendering phase. Normally you’d get many times more pixels drawn than you have particles, so it would be a lot slower to do it there.
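To put rough numbers on that, here is a small back-of-the-envelope sketch. The sprite coverage and the figure of 4 fetches per sample (one per render target holding the 16 layers) are illustrative assumptions, not measurements.

```python
def shadow_fetches(particle_count, pixels_per_sprite, fetches_per_sample=4):
    """Return (per-particle, per-pixel) shadow-map fetch counts for one frame.

    fetches_per_sample=4 assumes all four render targets are read for every
    sample; pixels_per_sprite is a hypothetical average screen coverage.
    """
    per_particle = particle_count * fetches_per_sample
    per_pixel = particle_count * pixels_per_sprite * fetches_per_sample
    return per_particle, per_pixel


# 1 million particles, each covering a hypothetical 4x4 pixels on screen.
per_particle, per_pixel = shadow_fetches(1_000_000, 16)
```

Under those assumptions the per-pixel route costs 16 times as many fetches, and the gap widens as sprites get larger on screen.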

Comment by directtovideo — March 23, 2010 @ 8:50 am

Thanks for this thorough write-up of your technique, I honestly think you have done an amazing job.

I am trying to do something similar myself and I was wondering how you created the noise needed for the curl-noise technique. Are you just using a pre-generated Perlin noise texture, or do you generate the noise on the GPU somehow? If so, how do you manage to do it fast enough?

Comment by Jens Fursund — May 17, 2010 @ 7:45 am

The noise is generated on the GPU using small 1D lookup textures. It’s not exactly Perlin noise; it’s a bit simpler – you don’t need the octave loop, for example. I took a few shortcuts to optimise it down, of course.

The shader is still quite long though. It weighs in at several hundred cycles although most of that is arithmetic. Good job GPUs are good at it.. 🙂

Comment by directtovideo — May 17, 2010 @ 8:07 am

Thanks for the quick reply… though I didn’t see it before now. Thought I had subscribed to the thread, but I guess the mail didn’t come through.

Interesting that you do the noise on the GPU; it must take some effort to make it optimal.

Comment by Jens Fursund — May 19, 2010 @ 3:48 pm

[…] animated even if some are invisible. You can find some useful information in this article https://directtovideo.wordpress.com/2009/10/06/a-thoroughly-modern-particle-system/ Ideally it would be great to have render to texture that supports floating point for WebGL, but […]

Pingback by Cedric Pinson – OpenGL / OpenSceneGraph / WebGL » Blog Archive » WeblGL Particles — August 17, 2010 @ 1:30 am

A question: you said the shading works like a deferred renderer, so you only shade in screen space after you’ve placed all the particles and colored them properly. Does this mean you don’t have any transparent particles or multisampling? As far as I knew, you needed exactly one fragment per pixel for deferred rendering.

Comment by Tim — August 21, 2010 @ 4:54 pm

By deferred shading I mean that I apply the shading to an accumulation buffer, but in particle space – one pixel per particle. The final point sprite render uses one colour value per particle.

Comment by directtovideo — August 22, 2010 @ 9:44 am

Why an accumulation buffer?

Comment by Tim — August 23, 2010 @ 6:02 pm

You have to accumulate the values for multiple lights to a buffer to get a final light result before applying the material and colour to it and getting a final particle colour.
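A minimal sketch of that accumulate-then-shade order, written as plain Python over lists in place of render targets. The data layout (a colour and a per-particle visibility list for each light) and the function name are assumptions for illustration only.

```python
def shade_particles(albedo, lights):
    """Sum light contributions per particle, then apply the particle colour.

    albedo: list of (r, g, b) material colours, one per particle.
    lights: list of dicts with "colour" (r, g, b) and "visibility", a list
            holding one shadow term in [0, 1] per particle (hypothetical layout).
    """
    # The accumulation buffer: one entry ("pixel") per particle.
    accum = [[0.0, 0.0, 0.0] for _ in albedo]
    for light in lights:
        for i, visibility in enumerate(light["visibility"]):
            for c in range(3):
                accum[i][c] += light["colour"][c] * visibility
    # Only after all lights are summed is the material colour applied.
    return [tuple(a * m for a, m in zip(acc, alb)) for acc, alb in zip(accum, albedo)]
```

Each particle ends up with a single final colour, which is what the point sprite pass then reads.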

Comment by directtovideo — November 15, 2010 @ 9:40 am

[…] The work made by these guys is amazing, but more important is that they talk about all the methods used in their works, so you can start searching on your own if you’d like to do something similar. In our case we wanted to make some particle animations like the ones found here, and they have the perfect starting point in this post. […]

Pingback by Curl Noise + Volume Shadow particles « miaumiau interactive studio — August 5, 2011 @ 5:43 pm

Just had a read through your article and what a fascinating read it was! I’m not a graphics programmer, but I think I was able to follow most of it. Thank you for sharing this with us, particularly how you’ve solved the shadowing problem for your particles. 🙂

Comment by DDas (@DDas) — August 15, 2011 @ 5:08 am

Hey! Nice work.

I am working on something very similar. I have two questions: do you use a time-varying noise function? And do you use a 3-dimensional Perlin noise texture (grid) or only 3 2D Perlin noise textures (one for each component)? I am not sure if 3 Perlin textures can generate a good curl.

Comment by Marcel Stockli — December 10, 2011 @ 7:03 am

Oh! I just noticed that you don’t need dX/dx to calculate the curl, so 3 Perlin noise textures should work.

Comment by Marcel Stockli — December 10, 2011 @ 7:46 am

We use curl noise, not Perlin, although it’s evaluated a bit like classic Perlin – using a 1D texture of coefficients. For 3D curl noise we use 3 separate 1D lookup textures. The time variation works by changing the contents of the 1D texture.
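For anyone wanting to experiment, here is a rough CPU sketch of the general idea: three 1D coefficient tables feed a simple lattice noise, one table per component of a potential field, and the particle velocity is the curl of that potential. The hash, the value-noise interpolation and the finite-difference curl are all stand-in assumptions. This is not the actual shader.

```python
import math
import random


def make_table(seed, size=256):
    """A 1D table of coefficients, standing in for a small 1D lookup texture."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(size)]


def fade(t):
    """Smoothstep weight, so the noise has no creases at lattice points."""
    return t * t * (3.0 - 2.0 * t)


def lerp(a, b, t):
    return a + (b - a) * t


def value_noise(table, x, y, z):
    """Single-octave lattice noise driven by one 1D table (hypothetical hash)."""
    xi, yi, zi = math.floor(x), math.floor(y), math.floor(z)
    tx, ty, tz = fade(x - xi), fade(y - yi), fade(z - zi)

    def corner(i, j, k):
        # Cheap integer hash of the lattice coordinate into the 1D table.
        return table[(i * 73 + j * 179 + k * 283) % len(table)]

    x00 = lerp(corner(xi, yi, zi), corner(xi + 1, yi, zi), tx)
    x10 = lerp(corner(xi, yi + 1, zi), corner(xi + 1, yi + 1, zi), tx)
    x01 = lerp(corner(xi, yi, zi + 1), corner(xi + 1, yi, zi + 1), tx)
    x11 = lerp(corner(xi, yi + 1, zi + 1), corner(xi + 1, yi + 1, zi + 1), tx)
    return lerp(lerp(x00, x10, ty), lerp(x01, x11, ty), tz)


def curl_noise(tables, p, h=1e-3):
    """Curl of the potential (one table per component), by central differences."""

    def d(axis, comp):
        # Partial derivative of potential component `comp` along `axis`.
        a, b = list(p), list(p)
        a[axis] += h
        b[axis] -= h
        return (value_noise(tables[comp], *a) - value_noise(tables[comp], *b)) / (2.0 * h)

    return (d(1, 2) - d(2, 1), d(2, 0) - d(0, 2), d(0, 1) - d(1, 0))
```

Because the velocity is a curl, it is divergence-free, which is what gives the motion its fluid-like look. In this sketch, time variation would come from replacing or blending the table contents between frames.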

Comment by directtovideo — December 12, 2011 @ 3:18 pm

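[…] This article by the great smash is also well worth a read; I basically followed what he did. However, the bitonic sort he describes scrambles the order of particles that are already sorted, so it is not suitable for a progressive implementation, and I switched to the sorting network from CLRS instead. […]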

Pingback by Fluid Motion by Curl Noise | 编程·早晨 — June 21, 2012 @ 8:23 pm

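[…] Particle Shadows, which is where I learned about curl noise (although it is not the focus of that article), and from there I found a thoroughly modern particle system. Because the author implemented it in d3d9, and did not use cuda as the nvidia article did, […]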

Pingback by 这大半年来做的事 | Code Play — April 25, 2013 @ 8:46 am